On-premise Installation

Prerequisites

Supported Operating Systems

- CentOS - Versions 6.6+, 7.3

- Red Hat Enterprise Linux - Version 7.5

- Ubuntu - Version 16.04 (supported for HDInsight only)

- Debian - Version 8.1 (supported for DataProc only)

- SUSE Linux Enterprise Server - Version 12

- EMR Operating System - Amazon Linux
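To compare a host against the list above, the distribution ID and version can be read from an os-release style file. The following is a minimal sketch, not part of the Infoworks installer: the function name print_os_version and its optional file argument are illustrative, and older releases may not ship /etc/os-release, in which case lsb_release -r is an alternative.

```shell
#!/usr/bin/env bash
# Print "<ID> <VERSION_ID>" from an os-release style file.
# print_os_version is a hypothetical helper name used for illustration only.
print_os_version() {
  local release_file="${1:-/etc/os-release}"
  [ -r "$release_file" ] || { echo "cannot read $release_file" >&2; return 1; }
  # Source the file in a subshell so its variables do not leak into the caller.
  (
    . "$release_file" || exit 1
    printf '%s %s\n' "${ID:-unknown}" "${VERSION_ID:-unknown}"
  )
}
```

Calling print_os_version with no argument reads /etc/os-release on the current host.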

Supported Hadoop Distributions

- HDP - Versions 2.5.5, 2.6.4, 3.x

- MapR - Version 6.0.1

- Azure - HDI 3.6

- GCP - 1.2 (Unsecured), 1.3 (Secured) Dataproc

- EMR - Version 5.17.0

Installation Procedure

Perform the following:

Step 1: Download the Installer

- Navigate to a temporary directory:

cd <temporary_installer_directory>

- Download the installer tar ball by running the following command:

wget <link-to-download>

NOTE: Contact support@infoworks.io to get the <link-to-download>.

Step 2: Extract the installer by running the following command: tar -xf deploy_<version_number>.tar.gz

This creates a directory named iw-installer.

Step 3: Navigate to the installer directory by running the following command: cd iw-installer
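The extraction and the check that iw-installer now exists can be wrapped in a small helper. This is a sketch under assumptions: extract_installer is a hypothetical function name, the working-directory argument is an addition for illustration, and the tar ball is assumed to have been downloaded already.

```shell
#!/usr/bin/env bash
# Extract an installer tar ball into a working directory and confirm that
# the iw-installer directory named in this guide was created.
# extract_installer is a hypothetical helper, not shipped with the installer.
extract_installer() {
  local tarball="$1" workdir="$2"
  [ -f "$tarball" ] || { echo "tar ball not found: $tarball" >&2; return 1; }
  mkdir -p "$workdir" || return 1
  tar -xf "$tarball" -C "$workdir" || return 1
  [ -d "$workdir/iw-installer" ] || {
    echo "iw-installer missing after extraction" >&2
    return 1
  }
  # Print the installer directory so the caller can cd into it.
  printf '%s\n' "$workdir/iw-installer"
}
```

For example, cd "$(extract_installer deploy_<version_number>.tar.gz .)" extracts into the current directory and then enters iw-installer.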

Step 4: Configure installation

- Run the following command:

./configure_install.sh

Enter the details for the following prompts:

- Hadoop distribution name and installation path (if not auto-detected)

- Infoworks user

- Infoworks user group

- Infoworks installation path where you need to install Infoworks. This location is referred to as IW_HOME.

- Infoworks HDFS home (path of home folder for Infoworks artifacts)

- Hive schema for Infoworks sample data

- IP address for accessing Infoworks UI (when in doubt use the FQDN of the Infoworks host)

- HiveServer2 thrift server hostname: Hostname of the instance where the HiveServer2 service is running.

- Hive user name

- Hive user password

If the Hadoop distribution is GCP:

- Managed MongoDB URL, if the MongoDB is not managed on the same machine.

- Are Infoworks directories already extracted in IW_HOME?

Step 5: Run Installation

Run the following command to install Infoworks: ./install.sh -v <version_number>

NOTE: For machines without certificate setup, set the --certificate-check parameter to false using the following syntax: ./install.sh -v <version_number> --certificate-check <true/false>. The default value is true. Setting it to false makes the installer perform insecure request calls and is not a recommended setup.

To exclude a particular service, use the --exclude-services option. For example, to exclude the Cube engine, run ./install.sh -v <version_number> --exclude-services cube-engine

- For HDP, CentOS/RHEL6, replace <version_number> with 2.9.0-hdp-rhel6

- For HDP, CentOS/RHEL7, replace <version_number> with 2.9.0-hdp-rhel7

- For MapR, CentOS/RHEL6, replace <version_number> with 2.9.0-rhel6

- For MapR, CentOS/RHEL7, replace <version_number> with 2.9.0-rhel7

- For Azure, replace <version_number> with 2.9.0-azure

- For GCP, replace <version_number> with 2.9.0-gcp

- For EMR, replace <version_number> with 2.9.0-emr

NOTE: To find the RHEL version, run one of the following commands: cat /etc/os-release or lsb_release -r
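The mapping from distribution and RHEL/CentOS major version to the value passed with -v can be expressed as a lookup. The sketch below only restates the list above; iw_version_for is a hypothetical helper name and the lowercase distribution keys (hdp, mapr, azure, gcp, emr) are assumptions made for illustration.

```shell
#!/usr/bin/env bash
# Map a distribution and RHEL/CentOS major version to the -v value.
# iw_version_for and its lowercase keys are hypothetical; the version
# strings are copied from the list in this guide.
iw_version_for() {
  local distro="$1" rhel_major="${2:-}"
  case "$distro" in
    hdp)
      case "$rhel_major" in
        6|7) echo "2.9.0-hdp-rhel${rhel_major}" ;;
        *) echo "unsupported RHEL/CentOS major version: $rhel_major" >&2; return 1 ;;
      esac
      ;;
    mapr)
      case "$rhel_major" in
        6|7) echo "2.9.0-rhel${rhel_major}" ;;
        *) echo "unsupported RHEL/CentOS major version: $rhel_major" >&2; return 1 ;;
      esac
      ;;
    azure|gcp|emr)
      echo "2.9.0-${distro}"
      ;;
    *)
      echo "unknown distribution: $distro" >&2
      return 1
      ;;
  esac
}
```

For example, ./install.sh -v "$(iw_version_for hdp 7)" would pass 2.9.0-hdp-rhel7 to the installer.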

The installation logs are available in <temporary_installer_directory>/iw-installer/logs/installer.log.
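When an installation fails, a quick first pass is to pull the lines that mention an error out of installer.log. This is a sketch only: show_install_errors is a hypothetical helper, and searching for the word "error" is an assumption, since the log format is not described in this guide.

```shell
#!/usr/bin/env bash
# Print log lines containing "error" (case-insensitive), with line numbers.
# show_install_errors is a hypothetical helper; the search term is a guess
# at what a failure looks like in the log.
show_install_errors() {
  local log_file="$1"
  [ -r "$log_file" ] || { echo "cannot read log: $log_file" >&2; return 1; }
  # grep exits non-zero when nothing matches; ignore its status so a
  # clean log is not reported as a failure of this helper.
  grep -in 'error' "$log_file" || true
}
```

For example, show_install_errors logs/installer.log can be run from inside the iw-installer directory.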

Silent Installation Procedure

To perform the installation offline, follow the steps below:

Step 1: Get the installer tar ball locally.

Step 2: Extract the installer by running the following command: tar -xf deploy_<version_number>.tar.gz

Step 3: Get the Infoworks DataFoundry tar ball.

Step 4: Run the following commands to place the Infoworks DataFoundry tar ball in the correct location:

mkdir iw-installer/downloads

cp infoworks-x.tar.gz iw-installer/downloads/

Step 5: Navigate to the installer directory by running the following command: cd iw-installer

Step 6: Go to Step 4 of the Installation Procedure.
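The staging commands in Step 4 can be made repeatable with a few checks around them. The following is a minimal sketch: stage_offline_tarball is a hypothetical helper name, and using mkdir -p (so that a second run does not fail when downloads already exists) is a small deviation from the plain mkdir shown in Step 4.

```shell
#!/usr/bin/env bash
# Copy the Infoworks DataFoundry tar ball into <installer_dir>/downloads.
# stage_offline_tarball is a hypothetical helper, not part of the installer.
stage_offline_tarball() {
  local tarball="$1" installer_dir="${2:-iw-installer}"
  [ -f "$tarball" ] || { echo "tar ball not found: $tarball" >&2; return 1; }
  [ -d "$installer_dir" ] || { echo "installer directory not found: $installer_dir" >&2; return 1; }
  # -p makes the call safe to repeat if downloads already exists.
  mkdir -p "$installer_dir/downloads" || return 1
  cp "$tarball" "$installer_dir/downloads/"
}
```

For example, stage_offline_tarball infoworks-x.tar.gz iw-installer performs the same placement as the two commands in Step 4.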

Post Installation

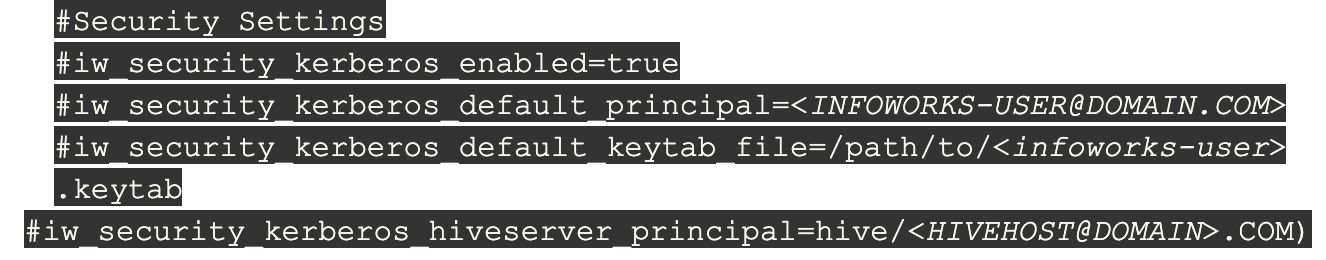

If the target machine is Kerberos enabled, perform the following post-installation steps:

- Go to <IW_HOME>/conf/conf.properties

- Edit the Kerberos security settings as follows (ensure that these settings are uncommented):

NOTE: Kerberos tickets are renewed before running any Infoworks DataFoundry job. The Infoworks DataFoundry platform supports a single Kerberos principal for a Kerberized cluster. Hence, all Infoworks DataFoundry jobs run using the same Kerberos principal, which must have access to all the artifacts in Hive, Spark, and HDFS.


(C) 2015-2022 Infoworks.io, Inc. and Confidential