Creating a Pipeline

Infoworks Data Transformation is used to transform data ingested by Infoworks DataFoundry for purposes such as consumption by analytics tools, pipelines, and the Infoworks Cube builder, or export to other systems.

For details on creating a domain, see Domain Management.

Following are the steps to add a new pipeline to the domain:

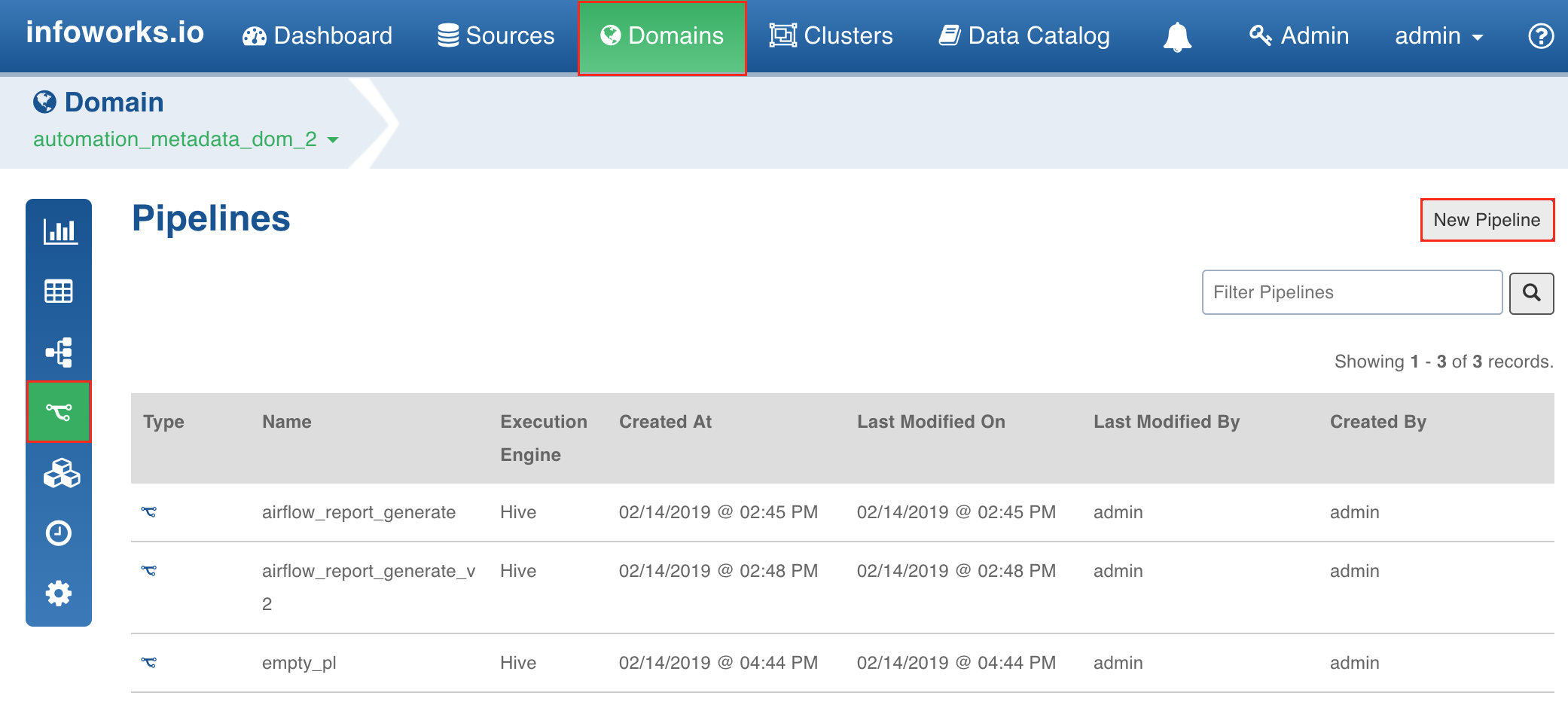

- Click the Domains menu and click the required domain from the list. You can also search for the required domain.

- In the Summary page, click the Pipelines icon.

- In the Pipelines page, click the New Pipeline button.

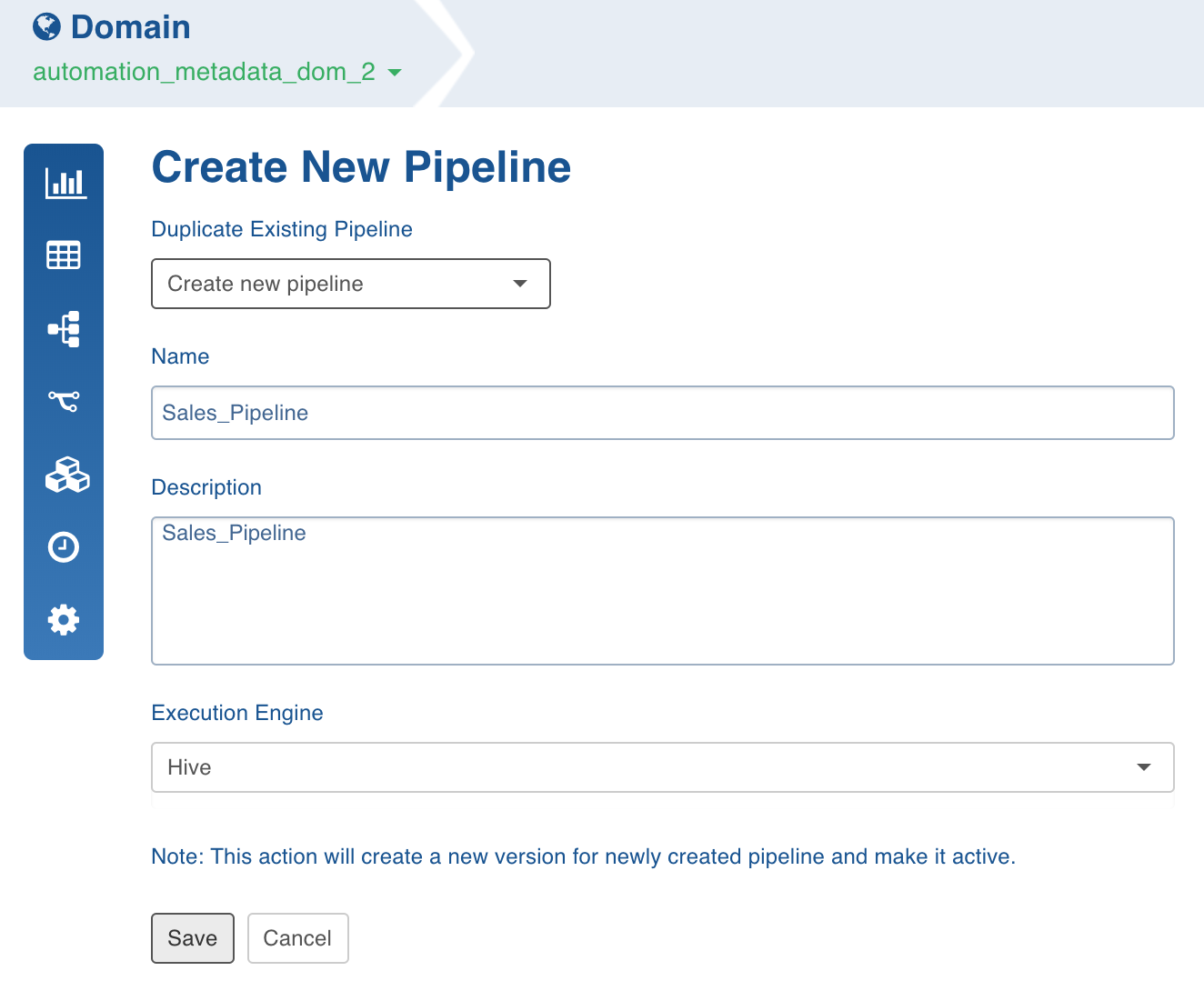

- In the New Pipeline page, select Create new pipeline.

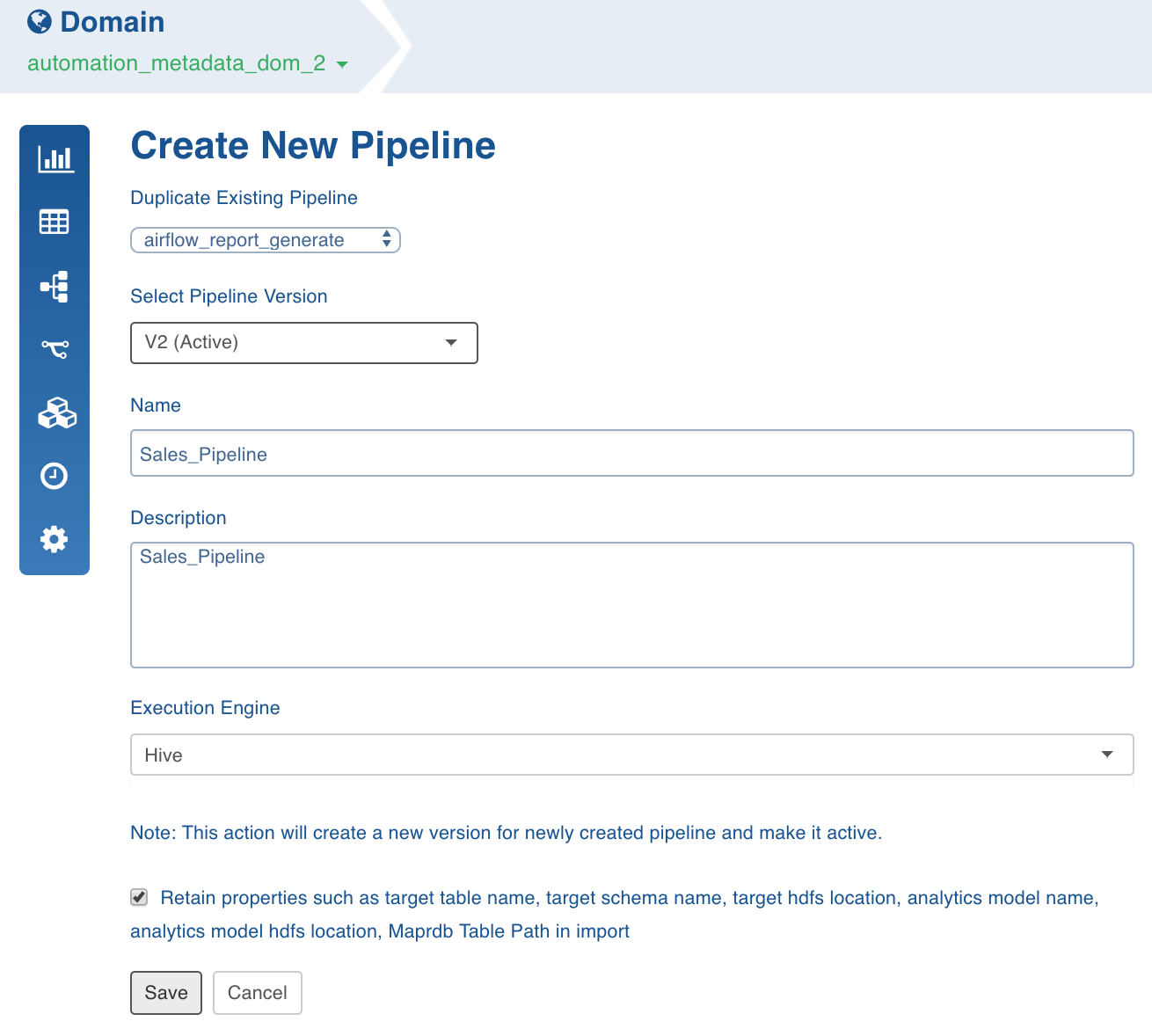

To duplicate an existing pipeline, select it from the Duplicate Existing Pipeline drop-down list. To retain properties such as the target table name, target schema name, target HDFS location, analytics model name, analytics model HDFS location, and MapR-DB table path on import, enable the checkbox.

- Enter the Name and Description.

- Select the Execution Engine type. The supported execution engines are Hive, Spark, and Impala.

- Click Save. The new pipeline will be added to the list of pipelines in the Pipelines page.

Using Spark: Spark v2.0 and higher are currently supported. As an execution engine, Spark uses the Hive metastore to store table metadata. All nodes supported by the Hive and Impala engines are also supported by the Spark engine.

Known Limitations of Spark

- Parquet has issues with the decimal type, which affect pipeline targets that include decimal columns. It is recommended to cast any decimal column to double when using it in a pipeline target.

- The number of tasks in the reduce phase can be tuned using the spark.sql.shuffle.partitions setting. This setting also controls the number of output files and can be tuned per pipeline using dt_batch_sparkapp_settings in the UI.

- Column names containing spaces are supported in Spark v2.0 but not in Spark v2.2. For example, a column named ID Number is not supported in Spark v2.2.
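The decimal and column-name limitations above can be checked before a table is used in a pipeline target. The following is a minimal, hypothetical sketch (the helper names and source_table are illustrative, not part of Infoworks): given a column-name-to-type mapping, it builds Spark SQL select expressions that cast decimal columns to double, and it lists column names containing spaces. It flags spaces only for Spark v2.2, because that is the version the limitation is documented for.

```python
from typing import Dict, List, Tuple

def decimal_safe_select(columns: Dict[str, str]) -> List[str]:
    """Build Spark SQL select expressions that cast decimal columns to double.

    `columns` maps a column name to its SQL type string, e.g. "decimal(10,2)".
    """
    exprs = []
    for name, col_type in columns.items():
        quoted = f"`{name}`"  # backtick-quote each identifier (Spark SQL quoting)
        if col_type.strip().lower().startswith("decimal"):
            exprs.append(f"CAST({quoted} AS DOUBLE) AS {quoted}")
        else:
            exprs.append(quoted)
    return exprs

def names_with_spaces(columns: Dict[str, str], spark_version: Tuple[int, int]) -> List[str]:
    """List column names containing spaces when the engine is Spark v2.2."""
    if spark_version != (2, 2):
        return []
    return [name for name in columns if " " in name]

if __name__ == "__main__":
    cols = {"ID Number": "int", "amount": "decimal(10,2)"}
    print("SELECT " + ", ".join(decimal_safe_select(cols)) + " FROM source_table")
    print(names_with_spaces(cols, (2, 2)))
```

Renaming the flagged columns (for example, replacing spaces with underscores) is one way to work around the v2.2 limitation.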

Submitting Spark Pipelines

A Spark pipeline can be configured to run in client or cluster mode during pipeline creation. In client mode, the Spark driver for the pipeline job runs on the edge node; in cluster mode, it runs on YARN.

Following are the steps to configure a pipeline in client or cluster mode:

- In the Create New Pipeline page, select the Client/Cluster Mode from the drop-down list.

- In the ${IW_HOME}/conf/conf.properties file, add ${SPARK_HOME}/conf/ to the dt_batch_classpath and dt_libs_classpath configuration values.

- Ensure that the iw_hdfs_home configuration is set in the ${IW_HOME}/conf/conf.properties file.

- Set dt_classpath_include_unique_jars=true in the ${IW_HOME}/conf/conf.properties file.
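The conf.properties settings above can be verified with a short script. This is a hypothetical sketch, not an Infoworks tool: it assumes plain key=value lines (it does not handle every Java properties syntax, such as line continuations or ':' separators) and only checks that the literal ${SPARK_HOME}/conf/ entry appears in each classpath value.

```python
from typing import Dict, List

def parse_properties(text: str) -> Dict[str, str]:
    """Parse simple key=value lines, skipping blanks and # or ! comments."""
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")) or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props

def check_client_cluster_conf(text: str) -> List[str]:
    """Return a list of problems with the client/cluster mode settings."""
    props = parse_properties(text)
    problems = []
    for key in ("dt_batch_classpath", "dt_libs_classpath"):
        # Checks the unexpanded form only; an already-expanded path is not recognized.
        if "${SPARK_HOME}/conf/" not in props.get(key, ""):
            problems.append(f"{key} does not include ${{SPARK_HOME}}/conf/")
    if not props.get("iw_hdfs_home"):
        problems.append("iw_hdfs_home is not set")
    if props.get("dt_classpath_include_unique_jars", "").lower() != "true":
        problems.append("dt_classpath_include_unique_jars is not true")
    return problems
```

For example, read ${IW_HOME}/conf/conf.properties, pass its contents to check_client_cluster_conf, and print any problems it returns; an empty list means all four settings were found.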

Ensure the following in the ${IW_HOME}/conf/${dt_spark_configfile_batch} file:

- The spark.driver.extraJavaOptions property must not be null. Set the value to empty if no Java options are required.

- If Spark jars are specified in the spark.yarn.jars property, the jars must point to the local file system where the Spark driver is running (spark.yarn.jars local:<path to spark jars>). <path to spark jars> is not configured on each YARN node.

- The ${IW_HOME}/conf/${dt_spark_configfile_batch} file must not contain duplicate Spark configurations.
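Two of the rules above, the presence of spark.driver.extraJavaOptions and the absence of duplicate keys, are easy to check mechanically. The sketch below is hypothetical and assumes the file uses spark-defaults.conf-style lines (a key, then whitespace or =, then a value); the actual file name comes from dt_spark_configfile_batch in your installation.

```python
import re
from collections import Counter
from typing import List

def check_spark_config(text: str) -> List[str]:
    """Return problems in a spark-defaults.conf-style configuration file."""
    keys = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        # Only the key is needed: split once on the first run of whitespace or '='.
        keys.append(re.split(r"[\s=]+", line, maxsplit=1)[0])

    problems = []
    for key, count in Counter(keys).items():
        if count > 1:
            problems.append(f"duplicate setting: {key} ({count} times)")
    # A line with the key and an empty value counts as present.
    if "spark.driver.extraJavaOptions" not in keys:
        problems.append("spark.driver.extraJavaOptions is missing (set it to an empty value if unused)")
    return problems
```

Because only keys are compared, two lines that set the same property to different values are reported as duplicates, which matches the rule that no Spark configuration may appear twice.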

Submitting Spark Pipeline through Livy

Spark pipeline jobs can also be submitted via Livy.

Following are the steps to submit a Spark pipeline job via Livy:

- Add the following key-value pair in the pipeline advanced configuration: job_dispatcher_type=livy

- Create a livy.properties file.

- In the $IW_HOME/conf/conf.properties file, set dt_livy_configfile=(absolute path of livy.properties).

- Set the following configuration in the livy.properties file:

livy.url=https://<livy_host>:<livy_port>

If Livy is configured to run in yarn-cluster mode, create an IW_HOME directory on HDFS, copy the local ${IW_CONF} directory to IW_HOME on HDFS, and set spark.driver.extraJavaOptions=-DIW_HOME=(HDFS_IW_HOME path) in the livy.properties file.
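The yarn-cluster preparation above can be scripted. The sketch below only builds the hdfs dfs commands and the resulting livy.properties line; the local conf path and the HDFS IW_HOME path are hypothetical placeholders, and running the commands requires the Hadoop hdfs client on the PATH.

```python
import shlex
from typing import List, Tuple

def yarn_cluster_setup(local_iw_conf: str, hdfs_iw_home: str) -> Tuple[List[List[str]], str]:
    """Return the hdfs commands to run and the livy.properties line to add."""
    commands = [
        # Create the IW_HOME directory on HDFS (no error if it already exists).
        ["hdfs", "dfs", "-mkdir", "-p", hdfs_iw_home],
        # Copy the local conf directory into IW_HOME on HDFS, overwriting existing files.
        ["hdfs", "dfs", "-put", "-f", local_iw_conf, hdfs_iw_home],
    ]
    java_opts = f"spark.driver.extraJavaOptions=-DIW_HOME={hdfs_iw_home}"
    return commands, java_opts

if __name__ == "__main__":
    # Hypothetical paths; substitute your ${IW_CONF} directory and HDFS IW_HOME.
    cmds, line = yarn_cluster_setup("/opt/infoworks/conf", "/user/infoworks/iw_home")
    for cmd in cmds:
        print(shlex.join(cmd))  # or run it with subprocess.run(cmd, check=True)
    print(line)
```

Returning argument lists rather than shell strings keeps paths with spaces intact when the commands are passed to subprocess.run.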

If Livy is Kerberos enabled, set the following configurations:

- livy.client.http.spnego.enable=true

- livy.client.http.auth.login.config=(Livy client Jaas file location)

- livy.client.http.krb5.conf=(krb5 conf file)

You can also add other optional Livy client configurations; for details, see livy-client.conf.template.
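The livy.properties settings described above can be assembled programmatically. In this hypothetical sketch, the URL and file paths are placeholders and the function name is illustrative; only the property names come from this page, and any optional settings from livy-client.conf.template can be passed through extra.

```python
from typing import Dict, Optional

def build_livy_properties(livy_url: str,
                          jaas_file: Optional[str] = None,
                          krb5_conf: Optional[str] = None,
                          extra: Optional[Dict[str, str]] = None) -> str:
    """Return livy.properties content; Kerberos keys are added when both paths are given."""
    if (jaas_file is None) != (krb5_conf is None):
        raise ValueError("set both jaas_file and krb5_conf to enable Kerberos")
    props = {"livy.url": livy_url}
    if jaas_file is not None:
        props["livy.client.http.spnego.enable"] = "true"
        props["livy.client.http.auth.login.config"] = jaas_file
        props["livy.client.http.krb5.conf"] = krb5_conf
    if extra:
        props.update(extra)  # optional Livy client settings
    return "\n".join(f"{key}={value}" for key, value in props.items()) + "\n"

if __name__ == "__main__":
    print(build_livy_properties("https://livy.example.com:8998",
                                jaas_file="/etc/infoworks/livy_client_jaas.conf",
                                krb5_conf="/etc/krb5.conf"), end="")
```

Requiring both Kerberos paths together avoids writing a half-configured SPNEGO setup.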

By default, Spark pipelines are submitted to an existing Livy session. If no Livy session is available, a new session is created and pipelines are submitted to it. Spark pipelines cannot share a Livy session created by another process; if Livy sessions are shared with other processes, set livy_use_existing_session=false in livy.properties.
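To see whether other processes are already using Livy sessions (and therefore whether livy_use_existing_session=false is needed), you can list sessions through Livy's REST API, which returns them from GET /sessions as a JSON object with a sessions array. The sketch below is a hypothetical helper, not how Infoworks chooses a session: it extracts the ids of idle sessions, optionally filtered by owner. The fetch function uses plain urllib with no authentication, so it will not work against a Kerberos (SPNEGO) protected Livy server.

```python
import json
import urllib.request
from typing import List, Optional

def idle_session_ids(sessions_json: str, owner: Optional[str] = None) -> List[int]:
    """Return ids of idle sessions from a Livy GET /sessions response body."""
    body = json.loads(sessions_json)
    ids = []
    for session in body.get("sessions", []):
        if session.get("state") != "idle":
            continue
        if owner is not None and session.get("owner") != owner:
            continue
        ids.append(session["id"])
    return ids

def fetch_sessions(livy_url: str) -> str:
    """GET <livy_url>/sessions without authentication and return the raw JSON text."""
    with urllib.request.urlopen(f"{livy_url.rstrip('/')}/sessions", timeout=10) as resp:
        return resp.read().decode("utf-8")
```

Sessions owned by users other than the Infoworks service user are a sign that the Livy server is shared with other processes.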

A Livy client JAAS file must include the following entries:

```
Client {
  com.sun.security.auth.module.Krb5LoginModule required
  useKeyTab=true
  keyTab="<user keytab>"
  storeKey=true
  useTicketCache=false
  doNotPrompt=true
  debug=true
  principal="<user principal>";
};
```

NOTE: Infoworks Data Transformation is compatible with livy-0.5.0-incubating and other Livy 0.5 compatible versions.

Yarn Queue for Batch Build

To run batch builds in a specific YARN queue, add the queue configuration to dt_batch_hive_settings (Hive pipelines) or dt_batch_sparkapp_settings (Spark pipelines) in the pipeline settings.

Other configurations, such as memory, cores, and mapper memory, can also be set using advanced configurations. For more details, see Hive Configuration Properties and Spark Configurations.

Following are the configurations to add the YARN queue name:

HIVE

- Tez: hive.tez.queue.name=<NAME>

- MR: hive.mapred.job.queue.name=<NAME>

SPARK

- spark.queue.name=<NAME>

Best Practices

For best practices, see General Guidelines on Data Pipelines.


(C) 2015-2022 Infoworks.io, Inc. and Confidential