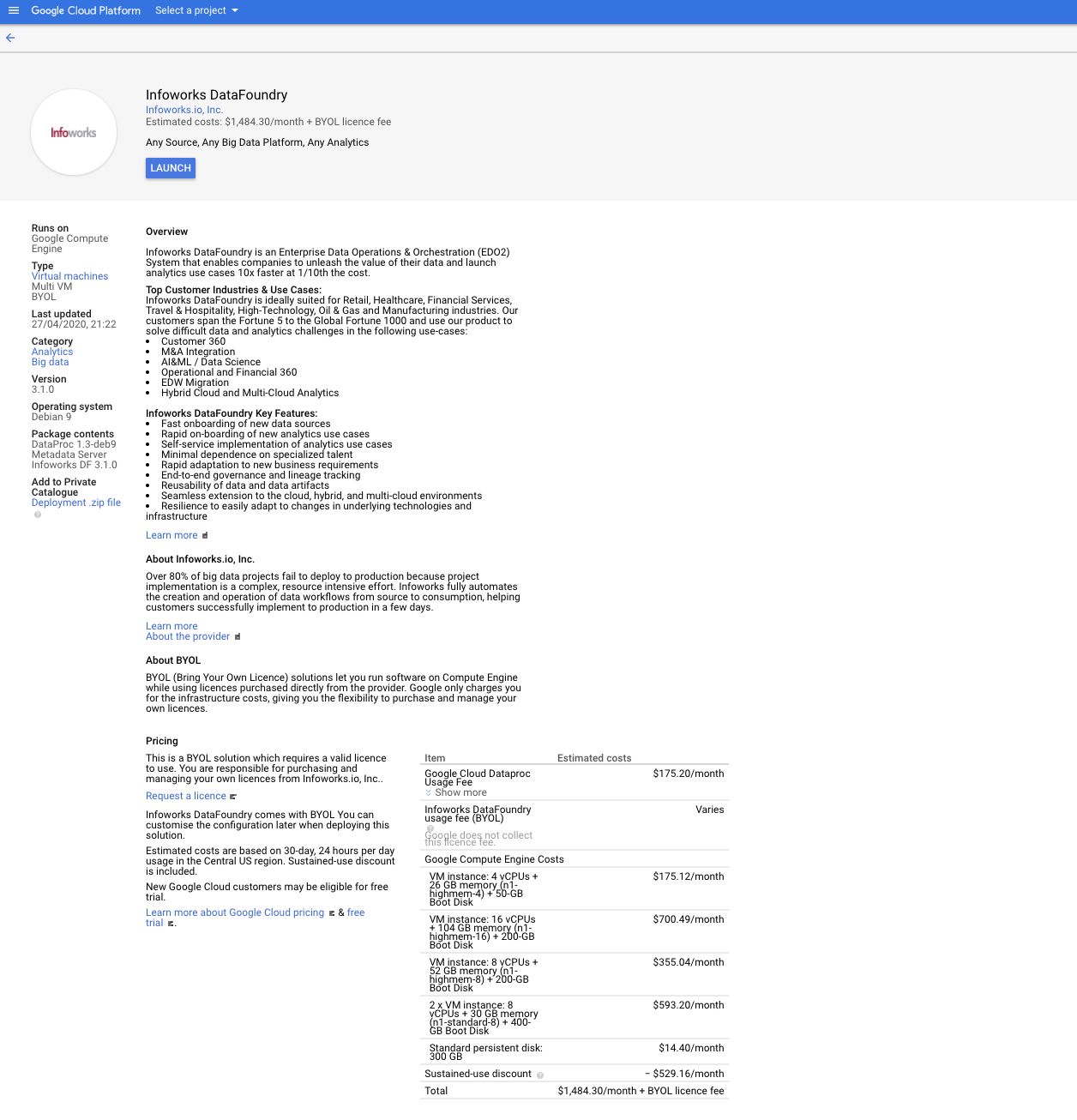

Launching and Configuring Infoworks DataFoundry

Perform the following steps to launch and configure Infoworks DataFoundry:

- Select Infoworks DataFoundry from the Google Cloud Platform Marketplace console.

- Click LAUNCH. The following screen is displayed.

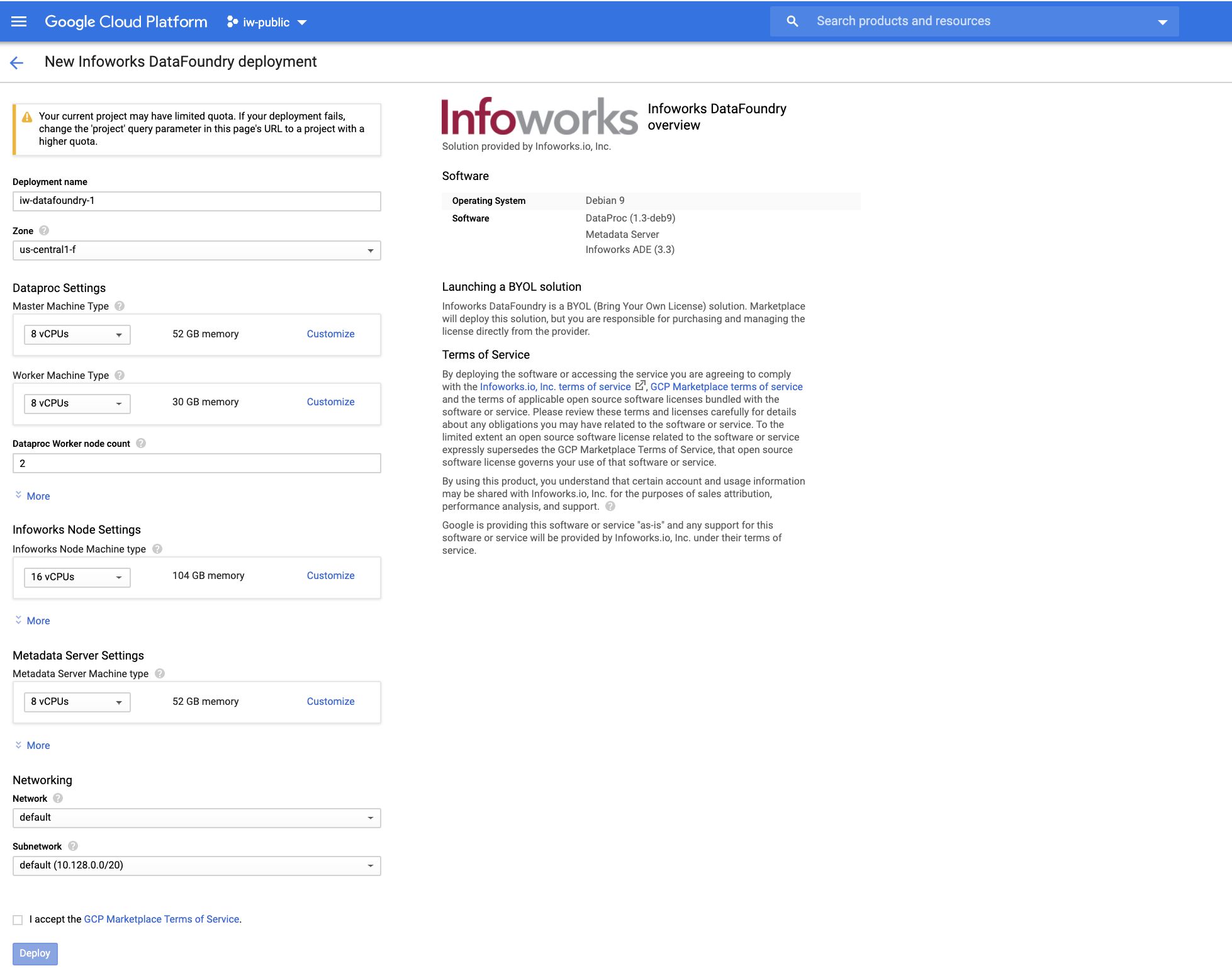

Deploying Infoworks DataFoundry

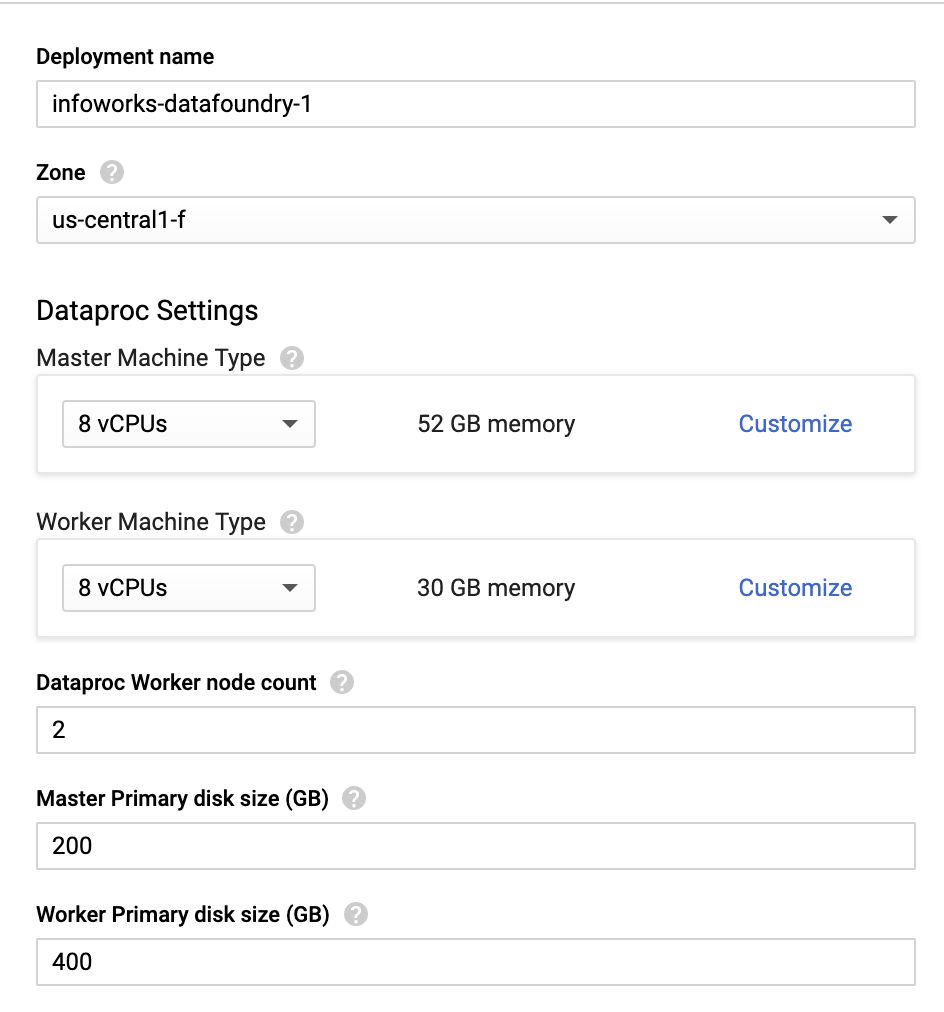

Configure the following settings:

- Deployment name - Enter the name for your deployment instance.

- Zone - The zone in which the Dataproc Hadoop cluster is created.

Dataproc Settings: Configure the Dataproc Hadoop cluster.

- Master Machine Type - Determines the specifications, such as the amount of memory and the number of virtual cores, of the Dataproc master instance. Master services run on this instance.

- Worker Machine Type - Determines the specifications, such as the amount of memory and the number of virtual cores, of each Dataproc worker instance. Worker services run on these instances.

- Dataproc Worker node count - Number of Dataproc worker nodes in the cluster. The minimum value is 2.

- Master Primary disk size (GB) - Size of the primary disk of the Dataproc master node.

- Worker Primary disk size (GB) - Size of the primary disk of each Dataproc worker node.
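The Dataproc fields above can be checked in a small script before you fill in the form. This is a minimal Python sketch, assuming a hypothetical DataprocSettings class that mirrors the form fields; it is not part of Infoworks or the Marketplace deployment, and the only rule it enforces is the documented minimum of 2 worker nodes.

```python
from dataclasses import dataclass

# Documented minimum number of Dataproc worker nodes.
MIN_DATAPROC_WORKERS = 2


@dataclass
class DataprocSettings:
    """Hypothetical mirror of the Dataproc Settings fields in the deployment form."""
    master_machine_type: str
    worker_machine_type: str
    worker_node_count: int
    master_disk_size_gb: int
    worker_disk_size_gb: int

    def validate(self) -> list:
        """Return a list of problems; an empty list means no documented rule is violated."""
        problems = []
        if self.worker_node_count < MIN_DATAPROC_WORKERS:
            problems.append(
                f"worker_node_count must be at least {MIN_DATAPROC_WORKERS}, "
                f"got {self.worker_node_count}"
            )
        return problems
```

For example, DataprocSettings("n1-standard-4", "n1-standard-4", 1, 100, 100).validate() reports the worker count, while the same settings with 2 workers return an empty list. The machine type and disk size values here are illustrative only.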

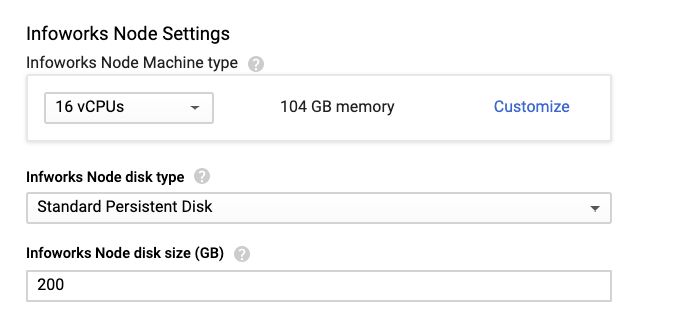

Infoworks Node Settings

- Infoworks Node Machine Type - This machine type determines the CPU and memory specifications of your Infoworks DataFoundry instance.

- Infoworks Node Disk Type - This is the disk type of your Infoworks edge node. The valid values are SSD or Standard.

- Infoworks Node Disk Size - This is the disk size of your Infoworks edge node.
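Because the Infoworks Node Disk Type accepts only SSD or Standard, a script that prepares deployment values might normalize and check the input first. The sketch below assumes that case-insensitive input is acceptable to normalize; InfoworksNodeDiskType and parse_disk_type are hypothetical names, not part of any Infoworks API.

```python
from enum import Enum


class InfoworksNodeDiskType(Enum):
    """The two values the deployment form accepts for the Infoworks edge node disk."""
    SSD = "SSD"
    STANDARD = "Standard"


def parse_disk_type(value: str) -> InfoworksNodeDiskType:
    """Match user input case-insensitively against the accepted disk types."""
    normalized = value.strip().lower()
    for member in InfoworksNodeDiskType:
        if member.value.lower() == normalized:
            return member
    valid = ", ".join(m.value for m in InfoworksNodeDiskType)
    raise ValueError(f"invalid disk type {value!r}; expected one of: {valid}")
```

Any value other than SSD or Standard raises a ValueError that lists the accepted options.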

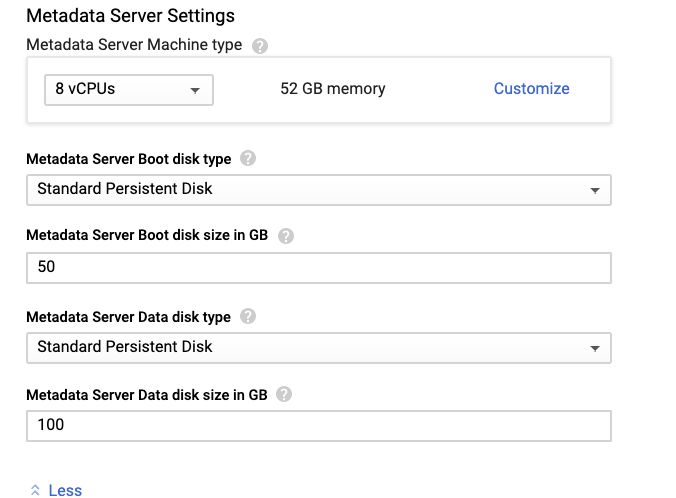

Metadata Server Settings

- Metadata Server Machine Type - This machine type determines the CPU and memory of the metadata server for Infoworks DataFoundry.

- Metadata Server Boot disk type - The type of the metadata server boot disk.

- Metadata Server Boot disk size in GB - The size of the metadata server boot disk.

- Metadata Server Data disk type - The type of the metadata server data disk.

- Metadata Server Data disk size in GB - The size of the metadata server data disk. Infoworks DataFoundry stores its metadata on this volume.

Networking

- Network - The name of your Virtual Private Cloud (VPC) network. The network determines which network traffic the instance can access.

- Subnetwork - The subnetwork whose IP range is used to assign your instance an IPv4 address. Instances in different subnetworks can communicate with each other using their internal IPs as long as they belong to the same network.
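To see how a subnetwork's IPv4 range relates to instance addresses, the sketch below uses Python's standard ipaddress module to find which subnetwork of a VPC network contains a given internal IP. The subnetwork names and CIDR ranges are hypothetical placeholders; substitute the ranges shown for your own VPC network in the Google Cloud Console.

```python
import ipaddress

# Hypothetical subnetworks of one VPC network; replace with your own ranges.
SUBNETWORKS = {
    "subnet-a": ipaddress.ip_network("10.128.0.0/20"),
    "subnet-b": ipaddress.ip_network("10.132.0.0/20"),
}


def subnetwork_of(internal_ip: str, subnetworks=SUBNETWORKS):
    """Return the name of the subnetwork whose IPv4 range contains internal_ip, or None."""
    addr = ipaddress.ip_address(internal_ip)
    for name, network in subnetworks.items():
        if addr in network:
            return name
    return None
```

An instance at 10.128.0.5 falls in subnet-a and one at 10.132.3.7 falls in subnet-b; both belong to the same network, so they can reach each other over their internal IPs.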

NOTE: The Dataproc cluster might require significant resources and a quota increase for the zone used for the deployment.

- Accept the GCP Marketplace Terms of Service, and then click Deploy.

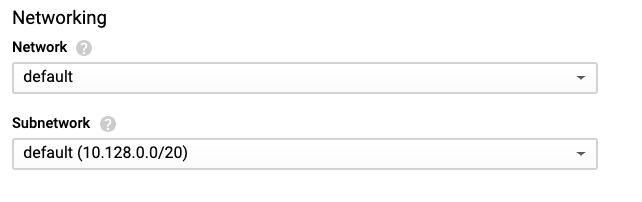

The following page is displayed.

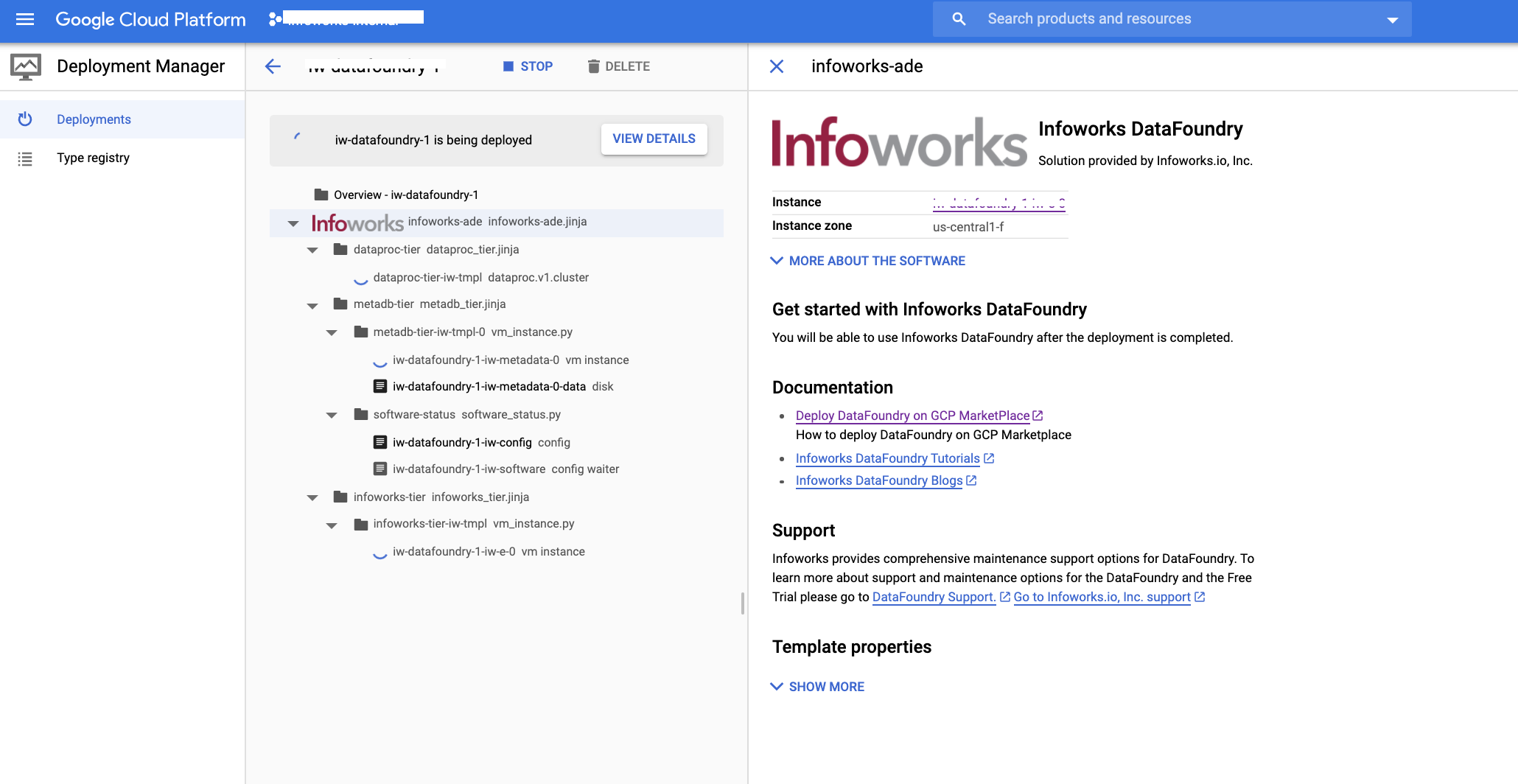

After the deployment completes, the following page is displayed. It includes the following options:

- SSH - Allows you to log in to the Infoworks server.

- WEB INTERFACE - Allows you to access the Infoworks DataFoundry via the web interface.

- Login to DataFoundry - Same as Web Interface. Allows you to access the Infoworks DataFoundry via the web interface.

Getting Started with Infoworks DataFoundry

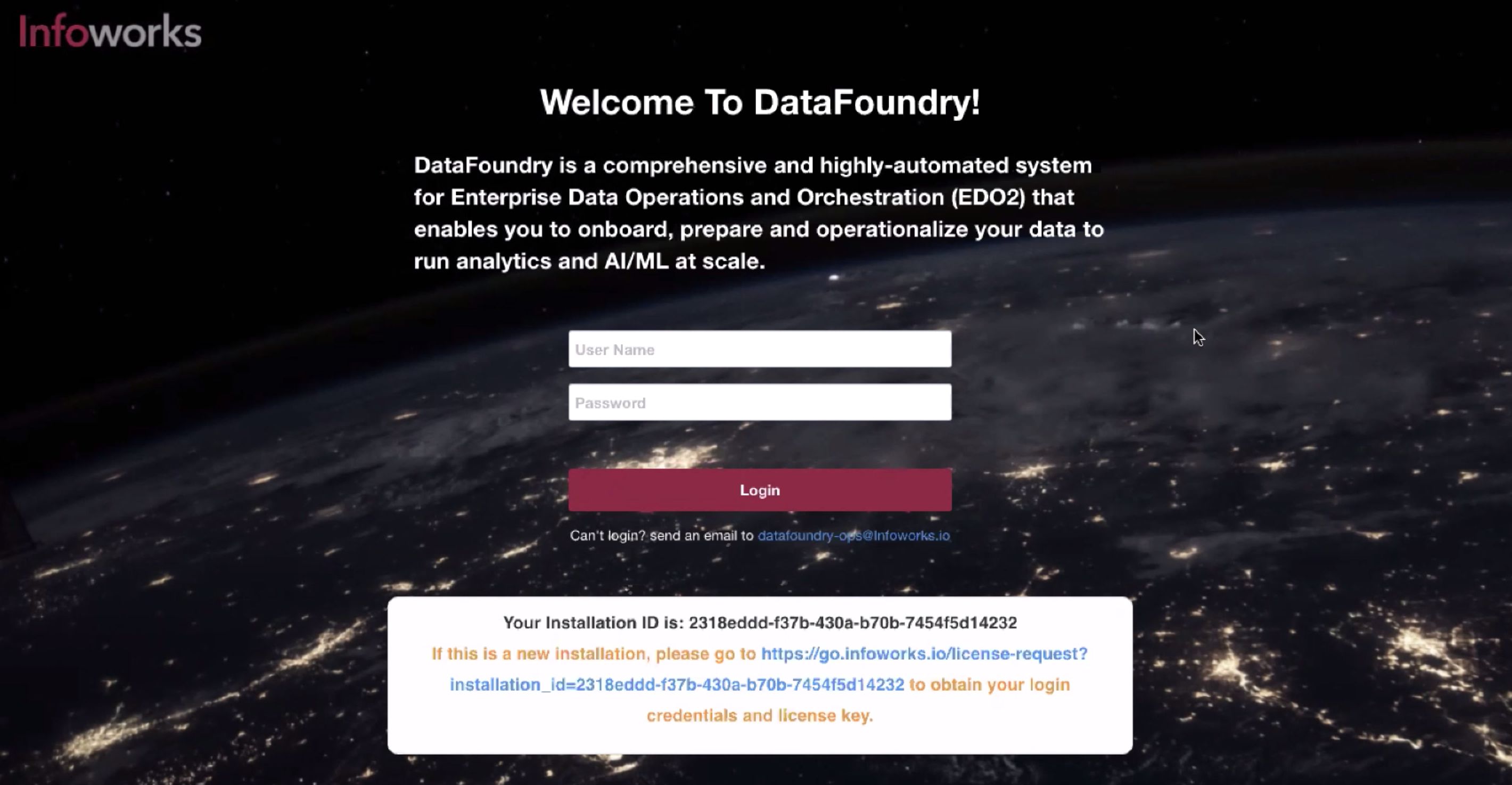

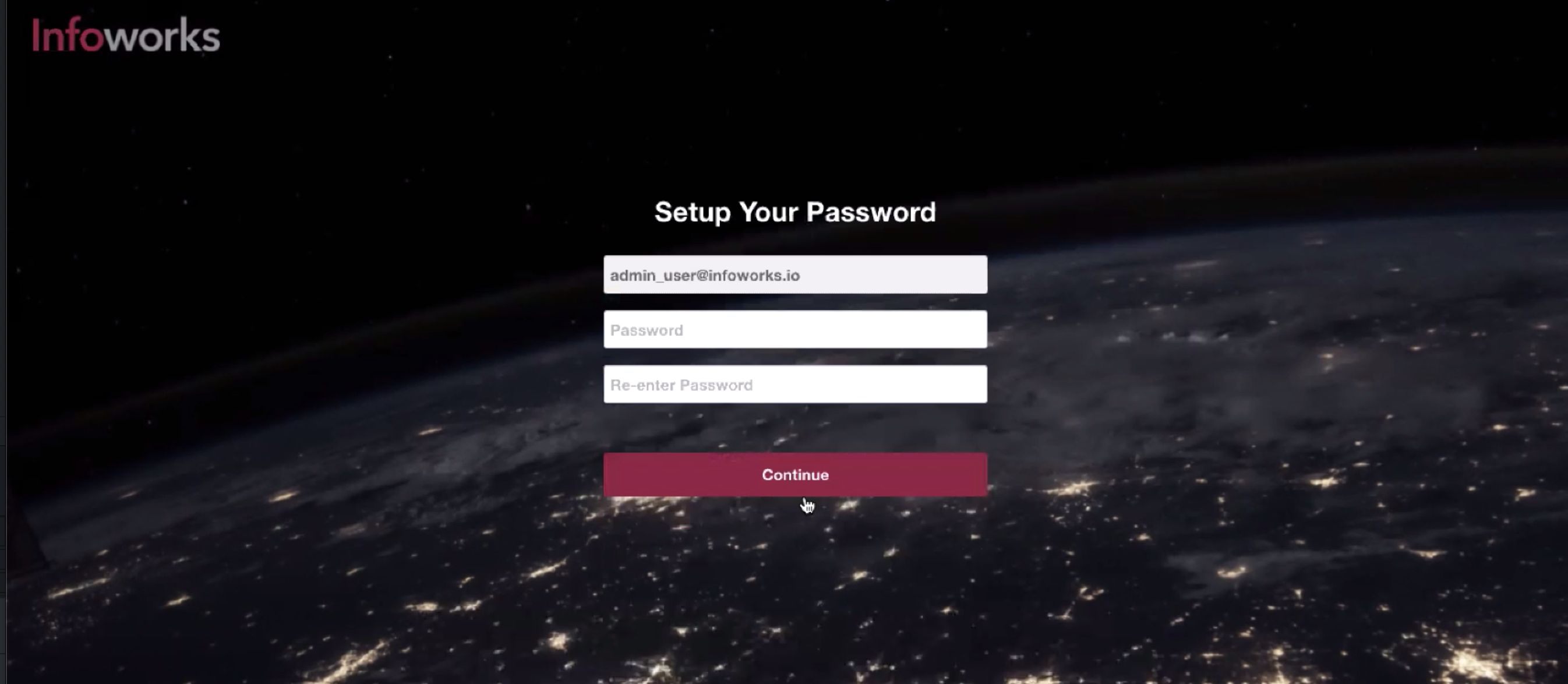

- Click WEB INTERFACE or the Login to DataFoundry link. The following page appears.

NOTE: After the previous page is displayed, it can take up to 1-2 minutes for the services on the edge node to become available. If you get a bad gateway error, click retry.

- To get started with Infoworks DataFoundry on Google Cloud Platform, you need a license key. If you do not have a license key, or if this is a new installation, click the URL highlighted in blue. The following page is displayed.

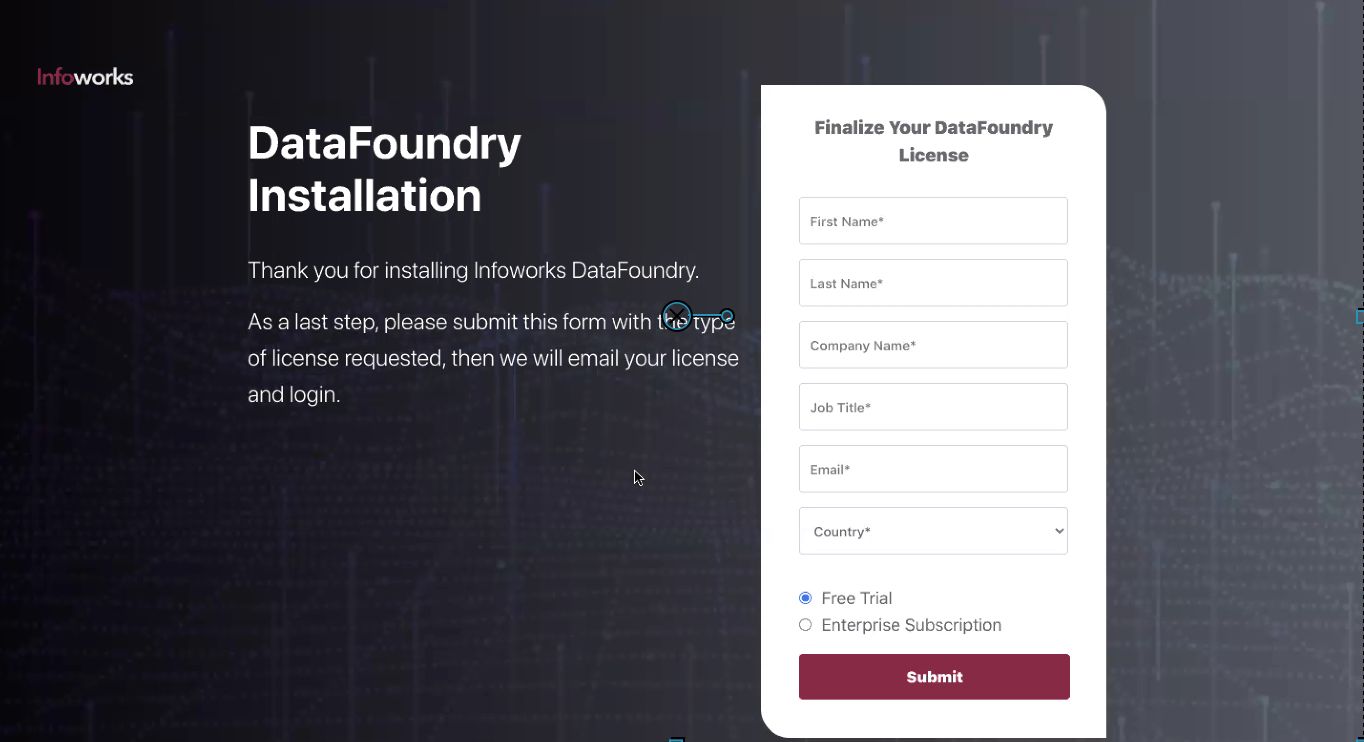

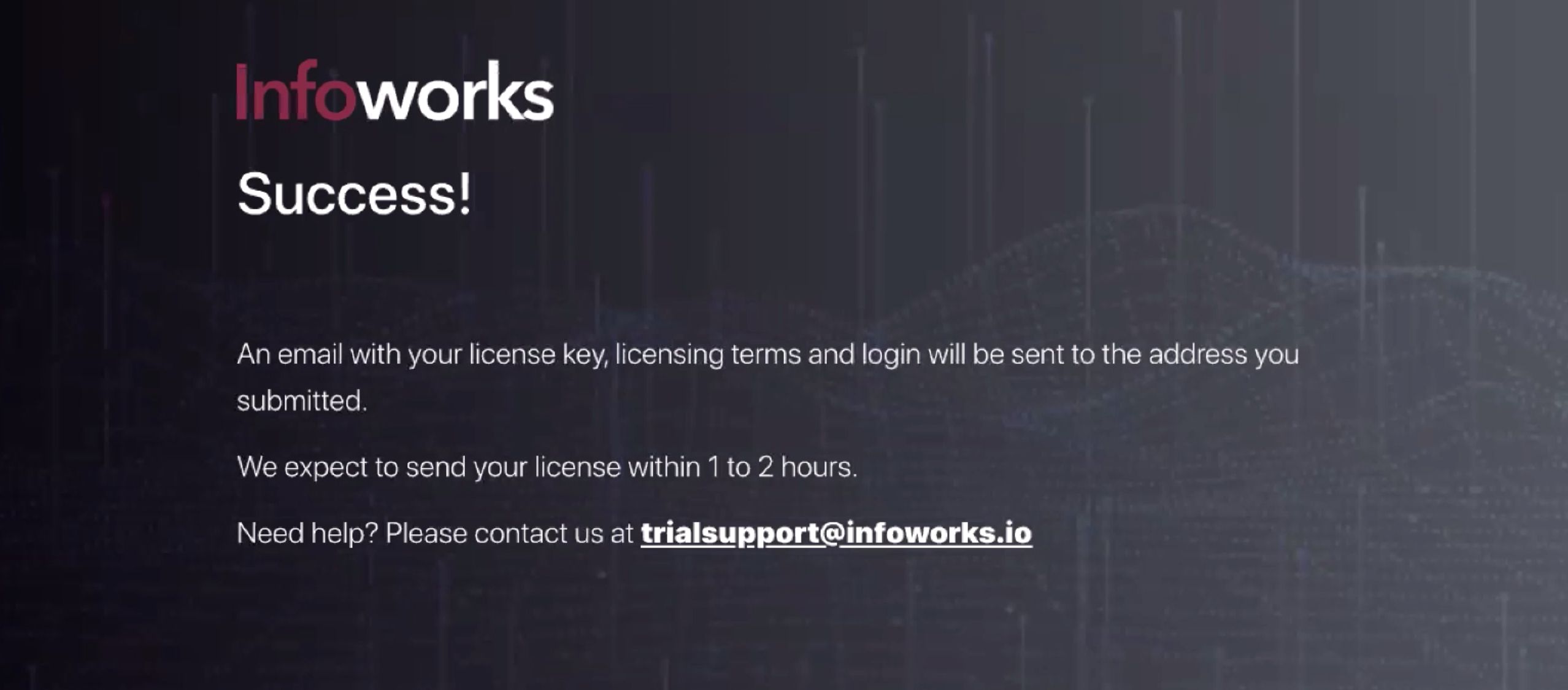

- Enter your details, choose your type of subscription, and then click Submit. The following page is displayed, and the license key is sent to your registered email address.

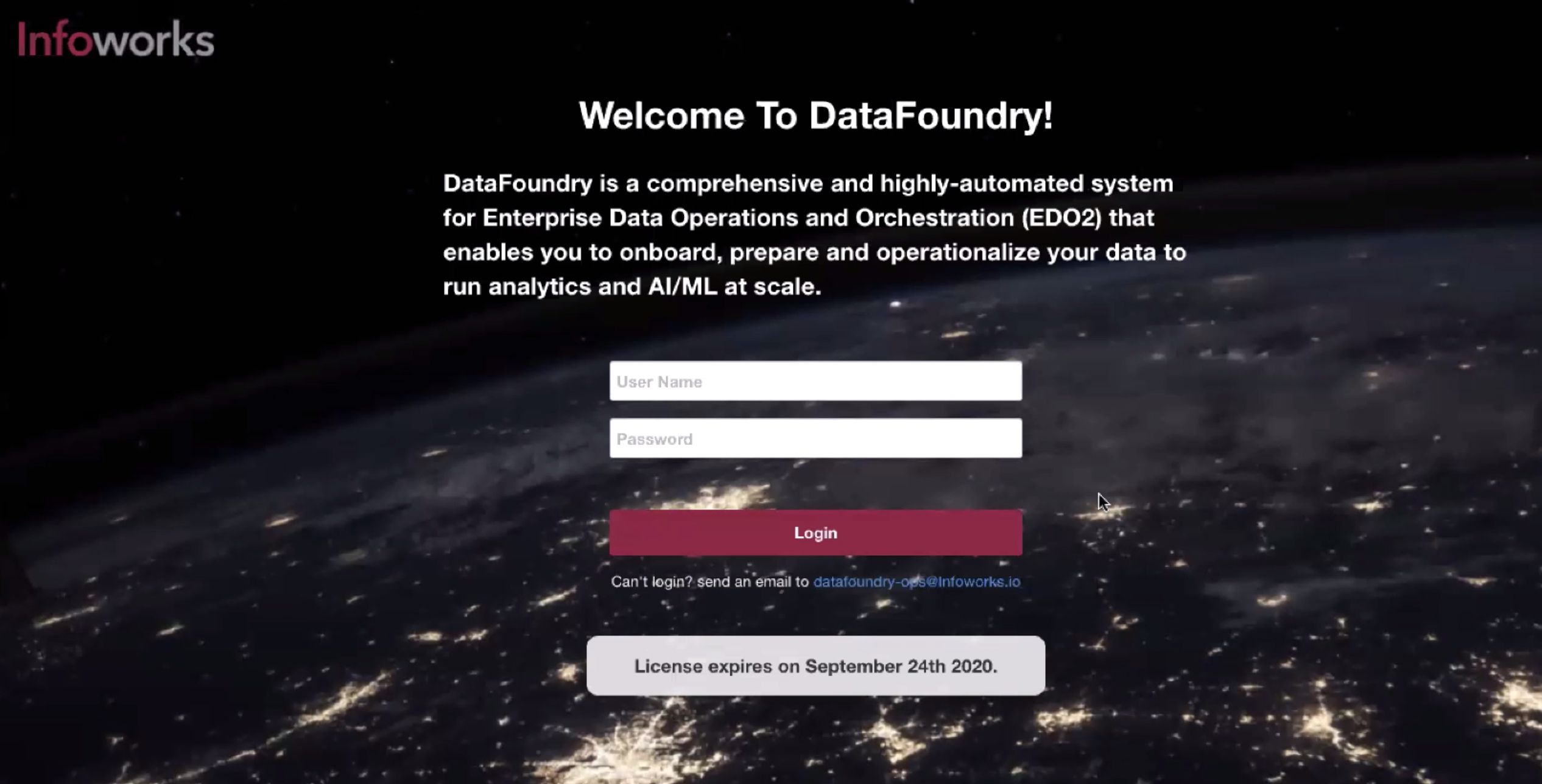

- Go back to the login page and enter your credentials to log in to Infoworks DataFoundry. The default email ID is admin@infoworks.io and the default password is admin.

NOTE: From the second login onward, the login page displays the expiry date of your subscription. When the subscription expires, the user is logged out of Infoworks DataFoundry.

- After logging in, you are redirected to reset the password. Enter the new password, re-enter it, and then click Continue. This resets the password.

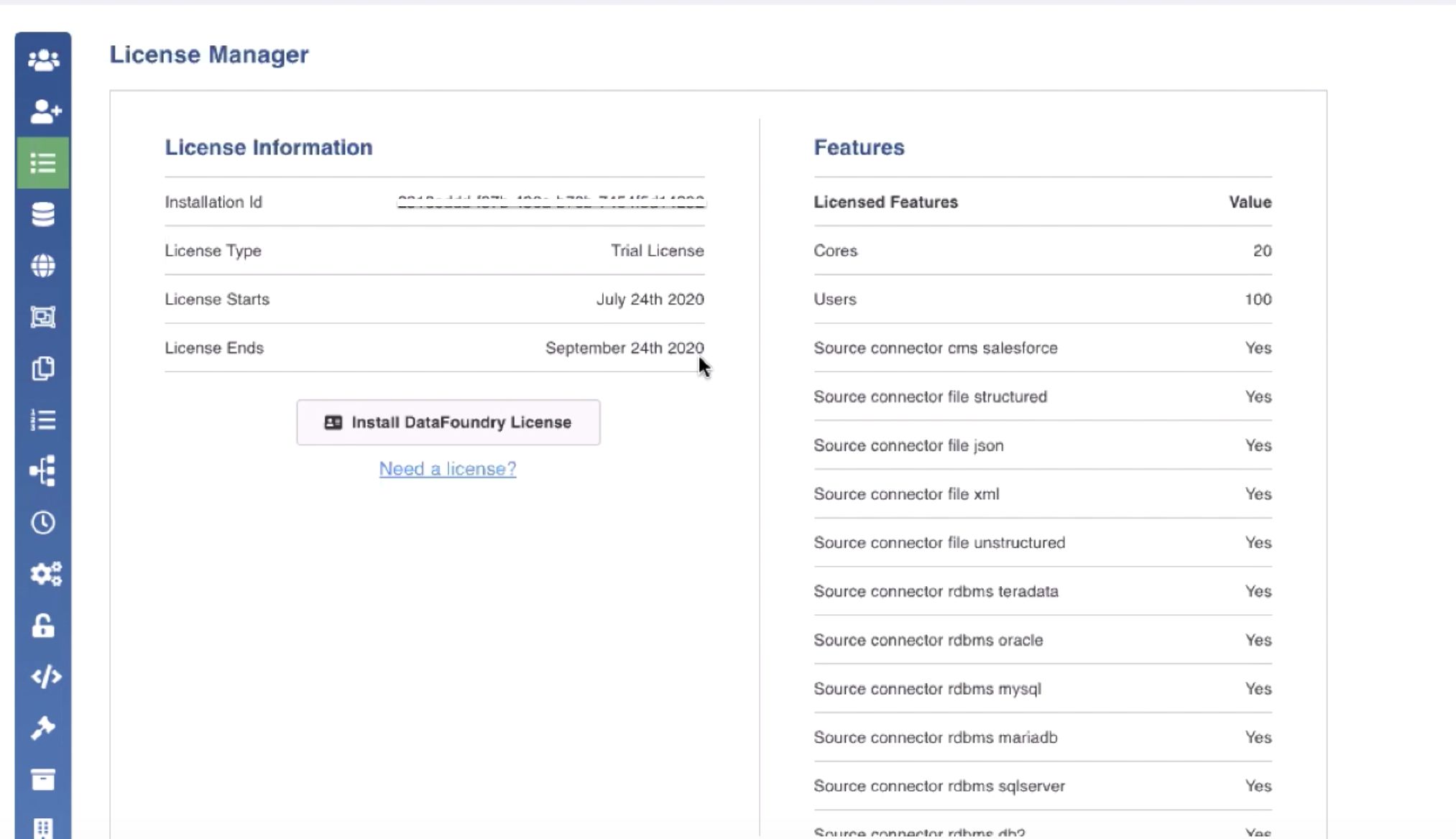

- On clicking Continue, the following page is displayed.

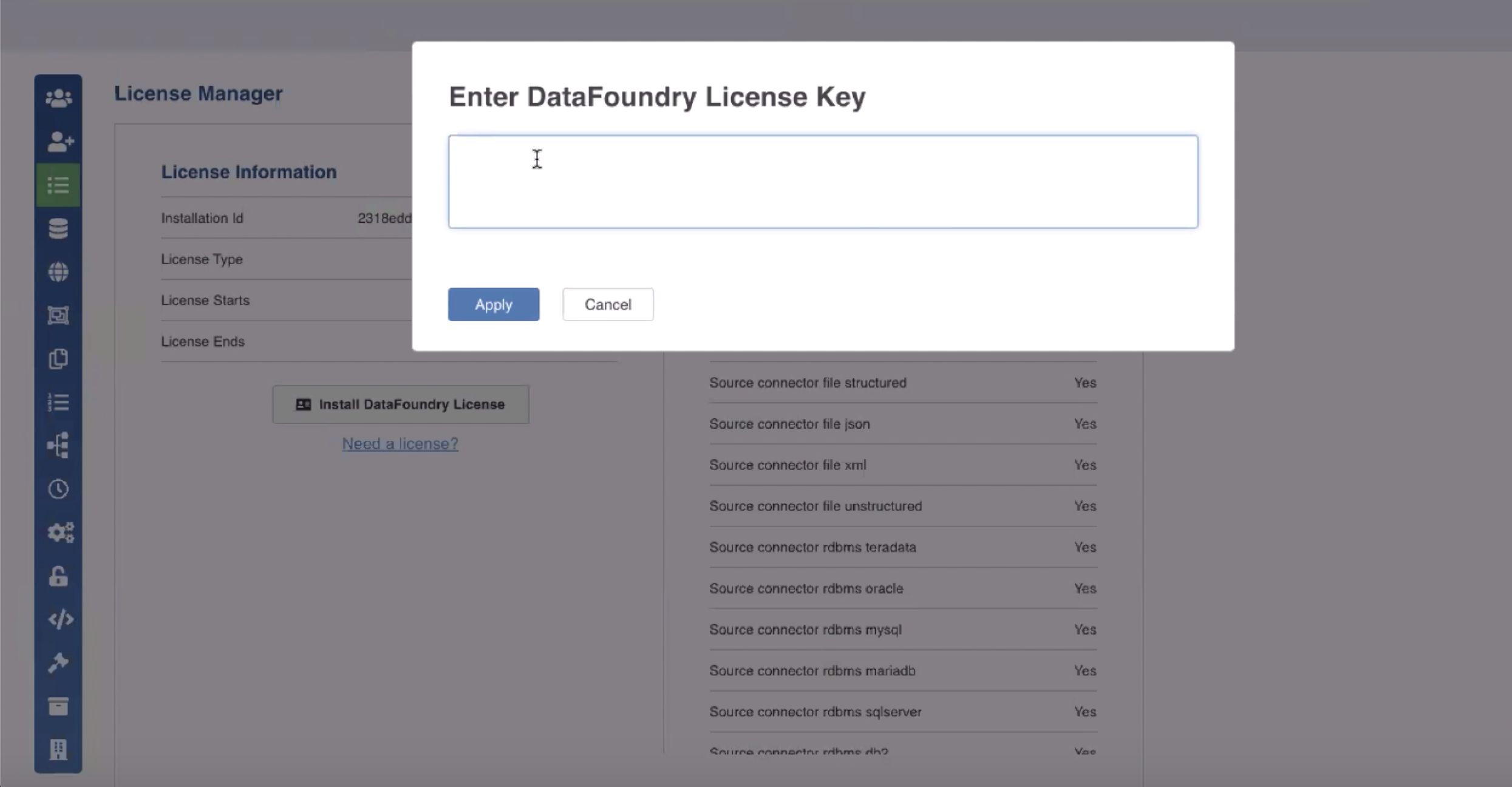

- Click the Install DataFoundry License button to enter the license key you received at your registered email address.

- Enter your license key and click Apply. To upgrade your license, click the Need a license link and send an email stating your request.

For more information on licenses, see the License Management document.

- You can now start using Infoworks DataFoundry.

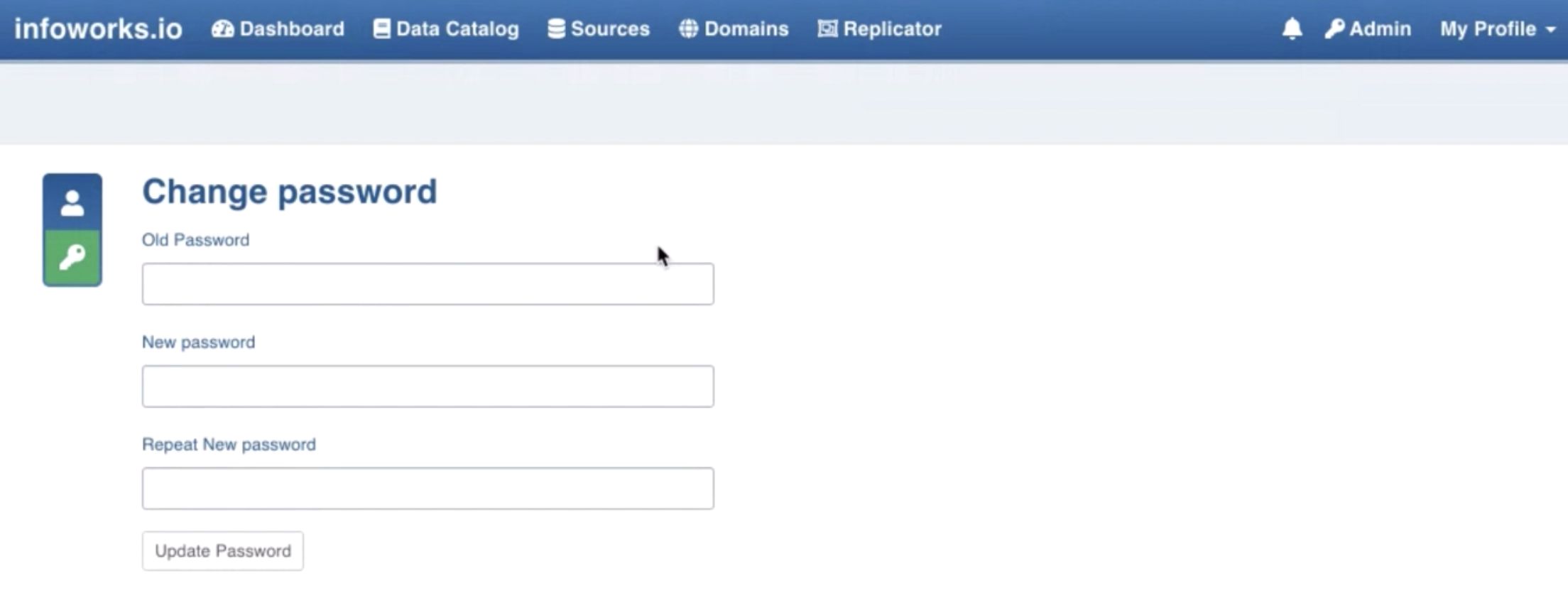
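If you script the first login, the NOTE on bad gateway errors in the login steps translates into a simple polling loop. The sketch below is a minimal example, assuming the web interface returns HTTP 502 while the edge node services are still starting; wait_for_web_interface and http_status are hypothetical helpers, and the default of 12 attempts 10 seconds apart roughly covers the 1-2 minute window mentioned in that NOTE.

```python
import time
import urllib.error
import urllib.request
from typing import Callable

BAD_GATEWAY = 502


def http_status(url: str) -> int:
    """Return the HTTP status code of a GET request to url."""
    try:
        with urllib.request.urlopen(url, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx responses; its code is the status.
        return err.code


def wait_for_web_interface(fetch_status: Callable[[], int], attempts: int = 12,
                           delay_seconds: float = 10.0,
                           sleep: Callable[[float], None] = time.sleep) -> bool:
    """Call fetch_status until it returns something other than 502 Bad Gateway.

    Returns True if a non-502 status arrives within `attempts` tries, else False.
    """
    for attempt in range(attempts):
        if fetch_status() != BAD_GATEWAY:
            return True
        if attempt < attempts - 1:
            sleep(delay_seconds)  # give the edge node services time to start
    return False
```

For example, call wait_for_web_interface(lambda: http_status(url)) with url set to the WEB INTERFACE address of your deployment. A connection error raised by urlopen propagates to the caller rather than being retried.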

Changing Password

To change the password at any time after the first password reset, perform the following steps:

- In Infoworks DataFoundry, click My Profile > Settings, and then click the Change Password icon.

- Enter the old and new passwords and click Update Password. The password is updated.

Advanced Configuration

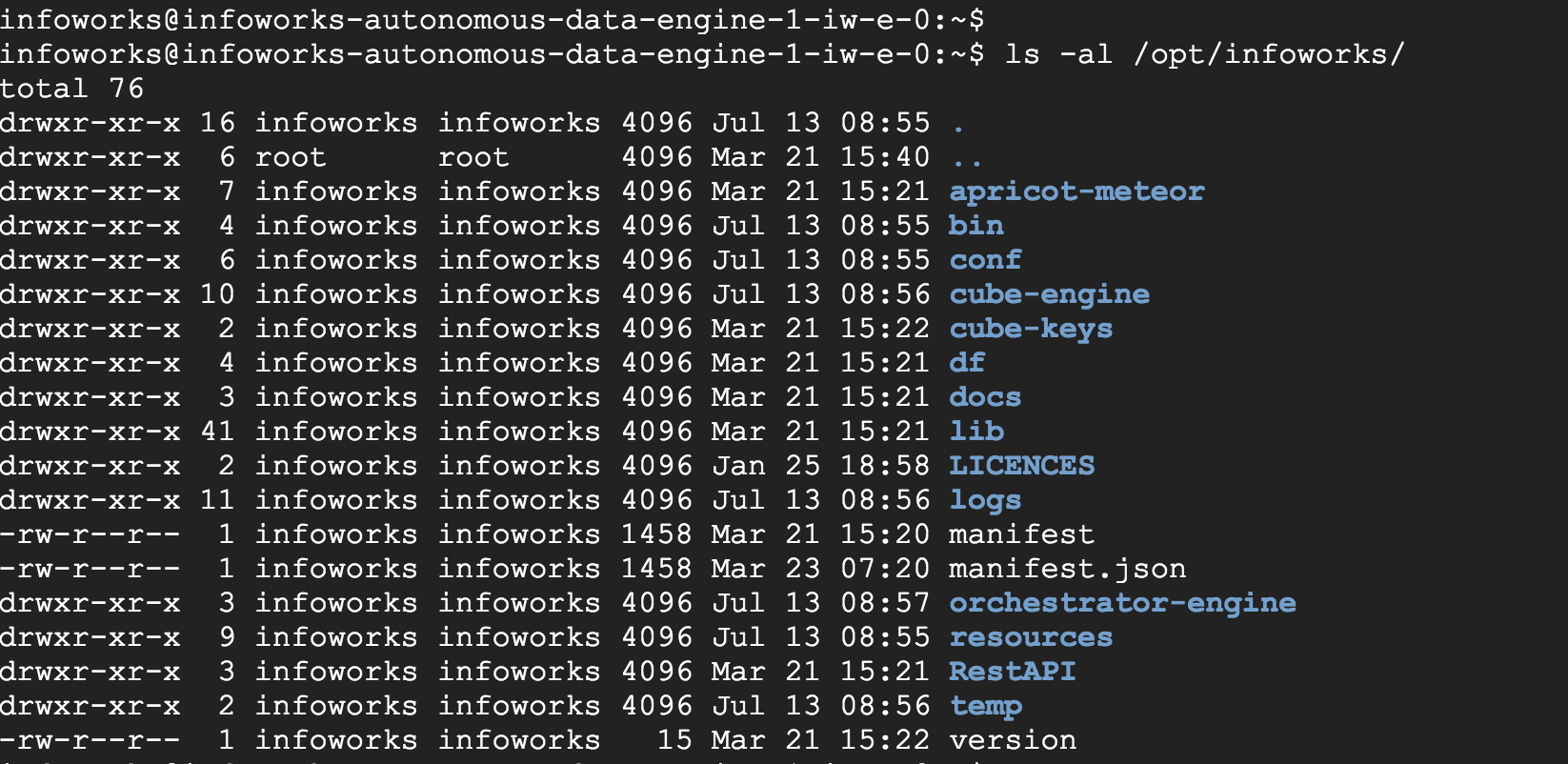

For a deeper understanding of the system, view the settings in the configuration files as follows:

- Click the SSH option.

The command prompt of the Infoworks server is displayed.

- The default Unix user for Infoworks is infoworks.

- Switch to this user using the command: sudo su - infoworks

- Navigate to the Infoworks configuration directory using the command: cd /opt/infoworks/conf

- The configuration files are located in this folder. The basic configurations are in the conf.properties file.

NOTE: It is recommended to add or overwrite configuration parameters only through the Infoworks web interface (Admin > Configuration).
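To inspect conf.properties from the SSH session without changing it, a read-only parser is enough. The sketch below assumes the file uses simple key=value lines with # comments, which matches the dt_classpath_include_unique_jars=true entry in the Spark Configurations section; it does not handle line continuations or other .properties syntax. read_properties is a hypothetical helper.

```python
def read_properties(text: str) -> dict:
    """Parse simple key=value lines, skipping blank lines and # or ! comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        # Split on the first '=' only, so values may themselves contain '='.
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props
```

For example, read_properties(Path("/opt/infoworks/conf/conf.properties").read_text()), with Path imported from pathlib, returns a dictionary of the basic configuration values. Use it only to read settings; make changes through Admin > Configuration.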

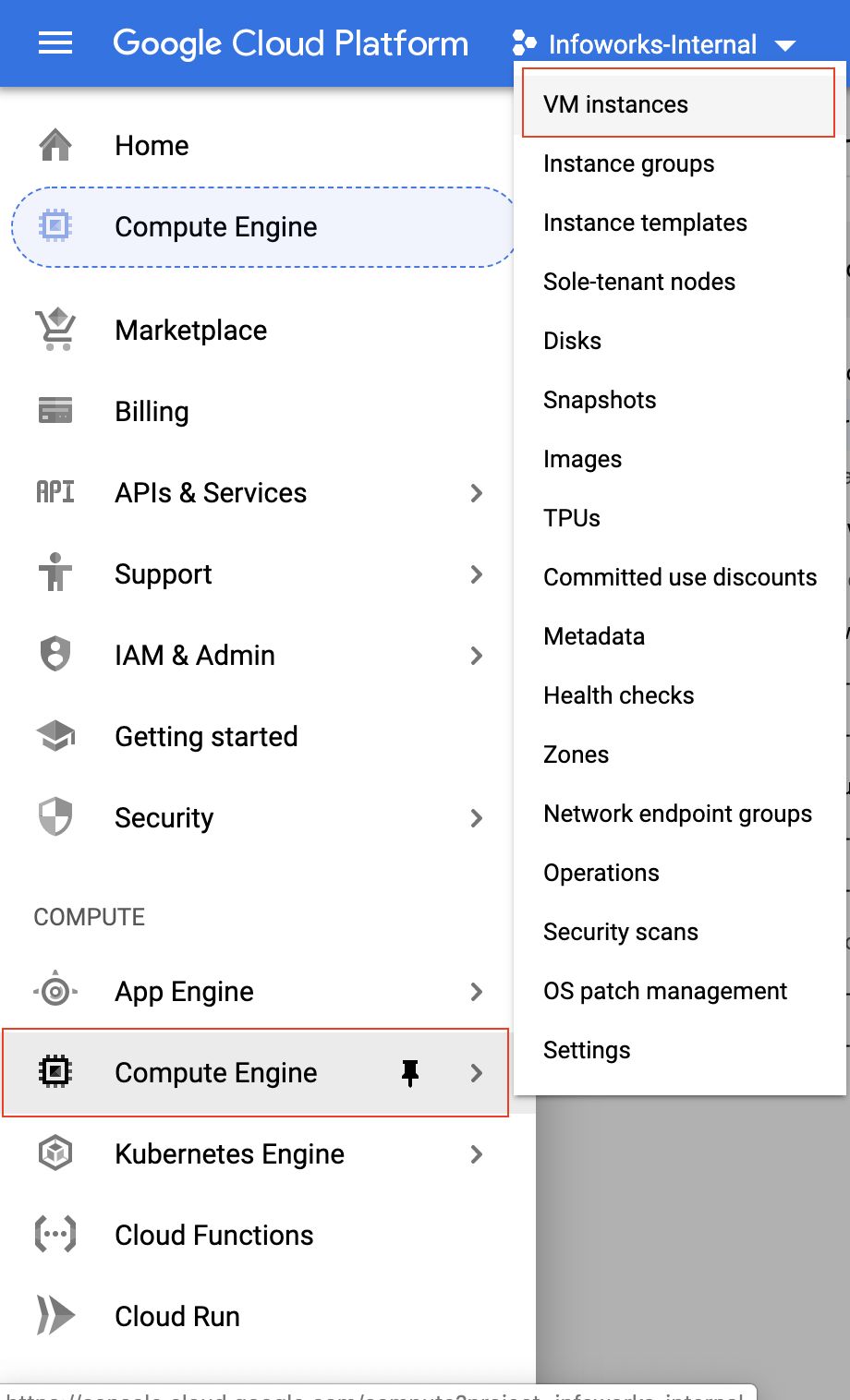

Viewing VM Instances

Log in to the Google Cloud Console. Click the icon at the top left, and then click Compute Engine > VM Instances.

The list of VM instances in the project is displayed, including the instances used by Infoworks.

Metadata Server Access Credentials

The Infoworks metadata server is hosted on a dedicated VM instance. It hosts a MongoDB database that has two users: admin and infoworks.

Write to datafoundry-ops@infoworks.io to obtain the default passwords of these users.
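Once you have the passwords, a MongoDB client such as pymongo connects with a standard MongoDB connection string. The sketch below only builds that string, percent-encoding the user name and password as MongoDB requires for characters such as @, : and /. The host, the default MongoDB port 27017, and authSource=admin are assumptions; confirm the actual values for your metadata server. mongodb_uri is a hypothetical helper.

```python
from urllib.parse import quote_plus


def mongodb_uri(user: str, password: str, host: str, port: int = 27017,
                auth_source: str = "admin") -> str:
    """Build a MongoDB connection string with percent-encoded credentials."""
    # quote_plus encodes reserved characters such as '@', ':' and '/'.
    return (f"mongodb://{quote_plus(user)}:{quote_plus(password)}"
            f"@{host}:{port}/?authSource={quote_plus(auth_source)}")
```

The resulting string can be passed to a client such as pymongo.MongoClient from a machine that can reach the metadata server's internal IP.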

Spark Configurations

Perform the following Spark configuration steps post deployment:

Step 1: In the /opt/infoworks/conf/dt_spark_defaults.conf file, do the following:

Step 1.a: Change the property spark.yarn.jars file:/usr/lib/spark/jars/* to spark.yarn.dist.jars local:/usr/lib/spark/jars/,file:/opt/infoworks/lib/dt/jars

Step 1.b: Under the User-supplied properties section, comment out the following property: spark.yarn.jars file:/usr/lib/spark/jars/*

Step 2: In the /opt/infoworks/conf/dt_spark_defaults.conf file, add the following property: spark.driver.extraJavaOptions

Step 3: In the /opt/infoworks/conf/conf.properties file, add the following property: dt_classpath_include_unique_jars=true
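Steps 1.b and 3 are plain text edits, so they can be scripted. The sketch below is a minimal Python example. It assumes that dt_spark_defaults.conf uses the whitespace-separated key value layout shown in step 1, that the User-supplied properties section is introduced by a line containing that phrase, and that conf.properties uses key=value lines. comment_out_after_marker and set_property are hypothetical helpers. Steps 1.a and 2 are not covered.

```python
def comment_out_after_marker(text: str, key: str,
                             marker: str = "User-supplied properties") -> str:
    """Prefix '#' to lines whose first token is `key`, but only after `marker` appears.

    Lines before the marker are left untouched.
    """
    out = []
    seen_marker = False
    for line in text.splitlines():
        if marker in line:
            seen_marker = True
        tokens = line.split()
        if seen_marker and tokens and tokens[0] == key:
            line = "#" + line  # comment out the user-supplied entry
        out.append(line)
    return "\n".join(out) + "\n"


def set_property(text: str, key: str, value: str) -> str:
    """Set key=value in properties text, replacing an active assignment or appending one."""
    lines = text.splitlines()
    new_line = f"{key}={value}"
    for i, line in enumerate(lines):
        stripped = line.strip()
        if stripped.startswith(("#", "!")):
            continue  # leave commented-out lines alone
        name, sep, _ = stripped.partition("=")
        if sep and name.strip() == key:
            lines[i] = new_line
            return "\n".join(lines) + "\n"
    lines.append(new_line)
    return "\n".join(lines) + "\n"
```

For example, passing the contents of /opt/infoworks/conf/conf.properties to set_property(text, "dt_classpath_include_unique_jars", "true") returns the updated text for step 3, which you can then write back to the file.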

Troubleshooting Steps

If the deployment fails with the error 'Bucket not found: Dataproc-temp-us-43xxxxxx-9xxxx_is not found', create the missing bucket in Cloud Storage, and then trigger the deployment again.

Getting Help

You can contact Infoworks support for any queries at support@infoworks.io.


(C) 2015-2022 Infoworks.io, Inc. and Confidential