HDFS File Transfer

Infoworks can also transfer HDFS folders directly, without involving Hive.

Batch HDFS File Transfer

No dedicated user interface is currently available for this functionality. However, you can still run it from the Infoworks user interface by calling a script, shipped with the Infoworks installation, from the Infoworks orchestrator (the Infoworks workflow engine).

The script is located in the <Infoworks_home>/bin/script/hdfs_transfer folder (the Infoworks home is typically /opt/infoworks). The hdfs_transfer folder contains the following files:

- hdfs_transfer.sh: The transfer script. It must be executed from inside the hdfs_transfer folder, and it requires the create_partitions.sql and file_transfer.xml files to run.

- create_partitions.sql: SQL template used to create the feedback tables at the end of the job.

- file_transfer.xml: XML configuration file used to configure the jobs.
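Because hdfs_transfer.sh depends on its two companion files, a quick pre-flight check can catch an incomplete folder before a run. The following is a minimal sketch; the function name check_hdfs_transfer_dir is hypothetical and is not shipped with Infoworks.

```shell
# Hypothetical pre-flight check (not part of the Infoworks installation).
# Prints each of the three expected files that is missing from the given
# directory and returns non-zero if any are absent.
check_hdfs_transfer_dir() {
  local dir="$1"
  local missing=0
  local f
  for f in hdfs_transfer.sh create_partitions.sql file_transfer.xml; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $f"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `check_hdfs_transfer_dir /opt/infoworks/bin/script/hdfs_transfer` prints nothing and returns 0 when the folder is complete.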

Using Script from Command Line

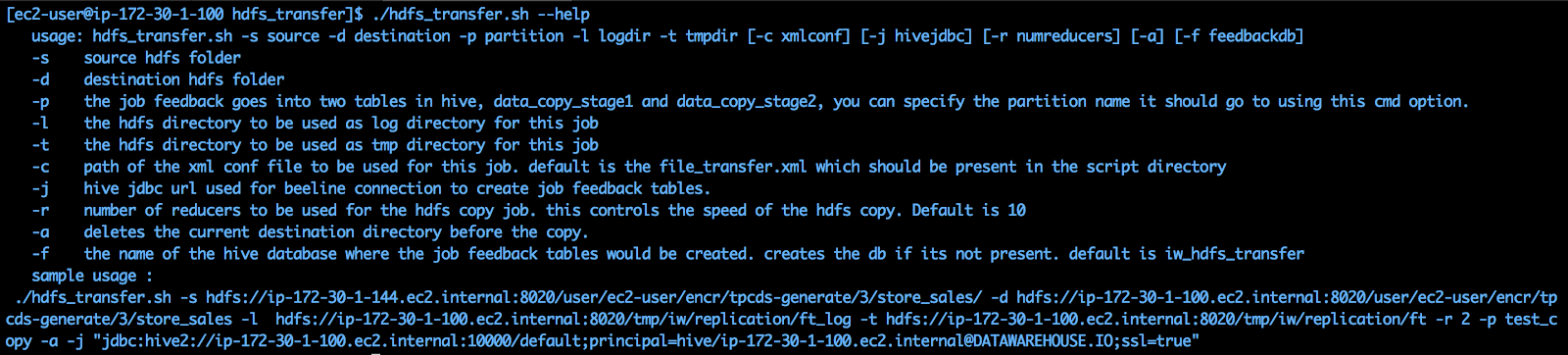

To display the script usage, run the following command from inside the hdfs_transfer folder:

```shell
./hdfs_transfer.sh --help
```

The following usage screen is displayed:

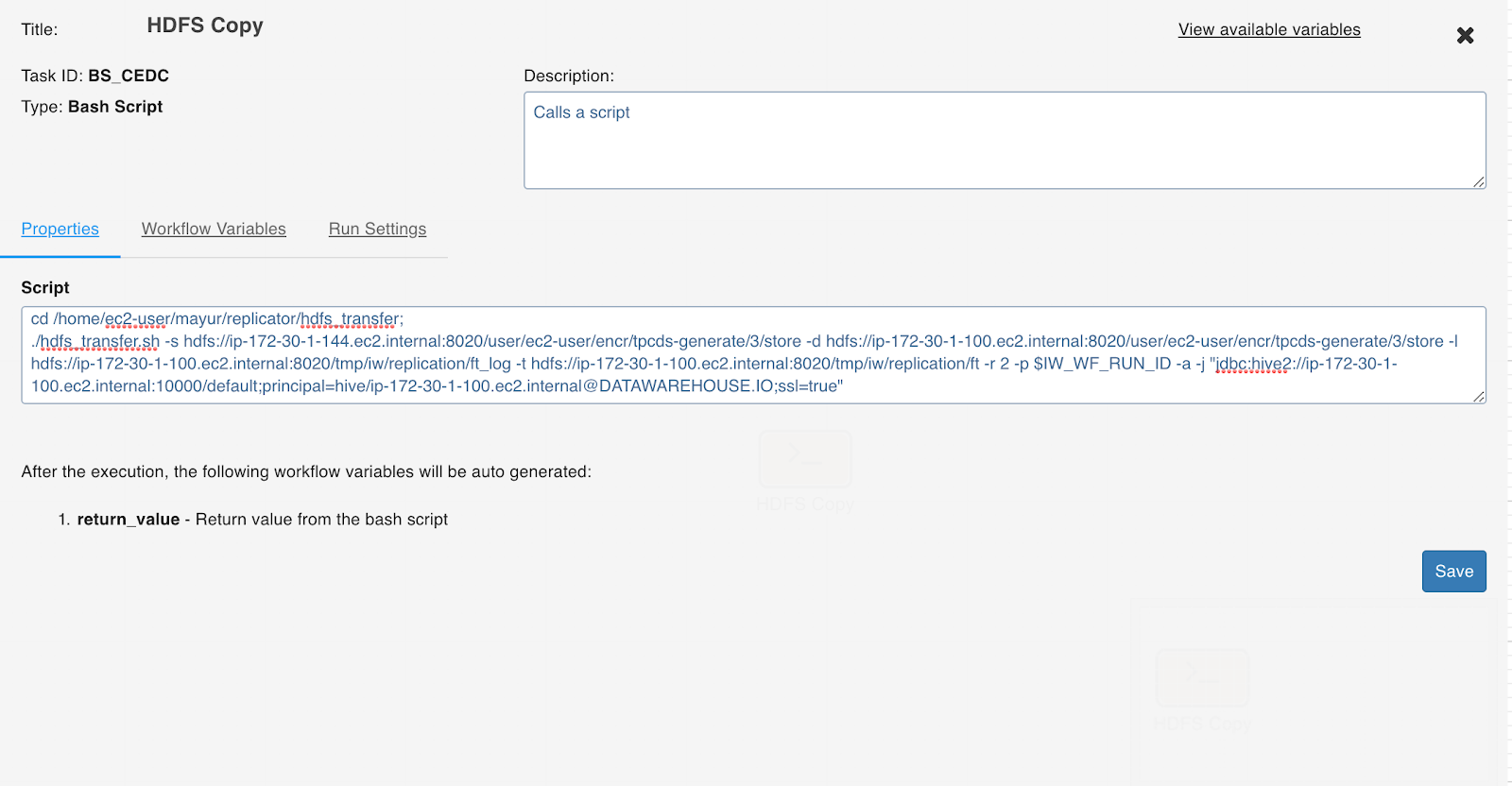

Using Script from Orchestrator

In the script section of a Bash Script node in a workflow, add the following steps, one per line:

- cd to the hdfs_transfer folder (see the location given above).

- Run the hdfs_transfer.sh script with the options you need.
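A Bash Script node body that follows these two steps might look like the sketch below. The function name run_hdfs_transfer is hypothetical, and no script options are assumed: the caller passes whatever options `./hdfs_transfer.sh --help` lists.

```shell
# Hypothetical wrapper for a Bash Script node.
# $1: path to the hdfs_transfer folder (for example
#     /opt/infoworks/bin/script/hdfs_transfer; adjust to your installation).
# Remaining arguments are passed unchanged to hdfs_transfer.sh.
run_hdfs_transfer() {
  local transfer_dir="$1"
  shift
  # hdfs_transfer.sh must be executed from inside its own folder.
  # The subshell keeps the directory change from leaking into the caller,
  # and its exit status is the exit status of the script.
  (
    cd "$transfer_dir" || exit 1
    ./hdfs_transfer.sh "$@"
  )
}
```

In the node, you would then call `run_hdfs_transfer /opt/infoworks/bin/script/hdfs_transfer` followed by the options from the usage screen. A non-zero exit status (including a failed cd) is returned to the workflow.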

Feedback Tables

The HDFS transfer task runs in two stages, each of which is a MapReduce job:

- The output of the first job is the list of files to be copied.

- The output of the second job is the list of all files with their copy status.

The outputs of both jobs are stored in two Hive tables named data_copy_stage1 and data_copy_stage2. The Hive database in which these tables are created, and the table partition in which the job feedback is saved, are configurable in the script. It is recommended to run the script from the Infoworks orchestrator and to use the workflow run ID as the partition value. The workflow run ID is available in the $IW_WF_RUN_ID variable in the script of the Bash Script node.
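To inspect the feedback for a particular run, you can query the corresponding partition of a feedback table in Hive. The sketch below only builds the HiveQL text. The helper name feedback_query is hypothetical, and so are the database name iw_feedback and the partition column name run_id used in the example, because the actual names depend on how the script is configured.

```shell
# Hypothetical helper: builds a HiveQL statement that reads one partition
# of an HDFS transfer feedback table. Database, partition column, and
# partition value are supplied by the caller.
feedback_query() {
  local db="$1" table="$2" part_col="$3" part_val="$4"
  # Only the two feedback tables described above are accepted.
  case "$table" in
    data_copy_stage1|data_copy_stage2) ;;
    *) echo "unknown feedback table: $table" >&2; return 1 ;;
  esac
  # Reject single quotes so the value cannot break out of the string literal.
  case "$part_val" in
    *"'"*) echo "invalid partition value" >&2; return 1 ;;
  esac
  printf "SELECT * FROM %s.%s WHERE %s = '%s';\n" "$db" "$table" "$part_col" "$part_val"
}
```

For example, inside a Bash Script node: `hive -e "$(feedback_query iw_feedback data_copy_stage2 run_id "$IW_WF_RUN_ID")"`.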

Incremental HDFS File Transfer

Incremental changes in HDFS directories can be handled with HDFS Monitor, which does not depend on Hive. For more details, see the Starting HDFS Monitor section.

Sample file_transfer.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>mapreduce.job.hdfs-servers.token-renewal.exclude</name>
    <value>ip-172-30-1-144.ec2.internal</value>
  </property>
  <property>
    <name>infoworks.replicator.distcp.preserve.status</name>
    <value>rugb</value>
  </property>
  <property>
    <name>infoworks.replicator.skip.crc</name>
    <value>false</value>
  </property>
  <property>
    <name>infoworks.replication.encryption.zones</name>
    <value>["/user/hive/warehouse/tpcds_bin_partitioned_parquet_3.db","/user/ec2-user/encr"]</value>
  </property>
  <property>
    <name>use.temp.path</name>
    <value>false</value>
  </property>
  <property>
    <name>zookeeper.connection.string</name>
    <value>ip-172-30-1-100.ec2.internal:2181</value>
  </property>
</configuration>
```


(C) 2015-2022 Infoworks.io, Inc. and Confidential